In mixed reality, where the real and digital worlds overlap, a user’s immersive engagement with the program is called presence. Now, UMass Amherst researchers are the first to identify reaction time as a potential presence measurement tool. Their findings, published in IEEE Transactions on Visualization and Computer Graphics, have implications for calibrating mixed reality to the user in real time.

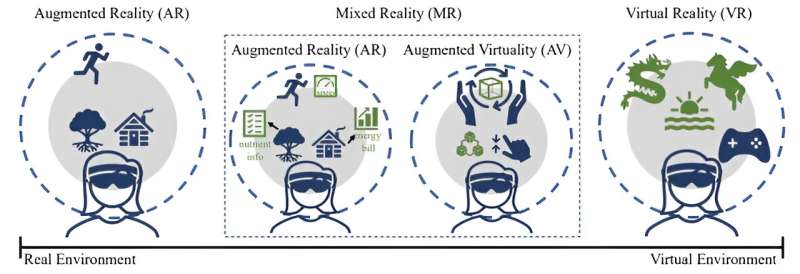

“In virtual reality, the user is in the virtual world; they have no connection with their physical world around them,” explains Fatima Anwar, assistant professor of electrical and computer engineering, and an author on the paper.

“Mixed reality is a combination of both: You can see your physical world, but then on top of that, you have that spatially related information that is virtual.” She gives attaching a virtual keyboard onto a physical table as an example. This is similar to augmented reality but takes it a step further by making the digital elements more interactive with the user and the environment.

The uses for mixed reality are most obvious within gaming, but Anwar says that it’s rapidly expanding into other fields: academics, industry, construction and health care.

However, mixed reality experiences vary in quality: “Does the user feel that they are present in that environment? How immersive do they feel? And how does that impact their interactions with the environment?” asks Anwar. This is what is defined as “presence.”

Up to now, presence has been measured with subjective questionnaires after a user exits a mixed-reality program. Unfortunately, when presence is measured after the fact, it’s hard to capture a user’s feelings about the entire experience, especially during long-exposure scenes. (Also, people are not very articulate in describing their feelings, making them an unreliable data source.) The ultimate goal is to have an instantaneous measure of presence so that the mixed reality simulation can be adjusted in the moment for optimal presence. “Oh, their presence is going down. Let’s do an intervention,” says Anwar.

Yasra Chandio, doctoral candidate in computer engineering and lead study author, gives medical procedures as an example of the importance of this real-time presence calibration: If a surgeon needs millimeter-level precision, they may use mixed reality as a guide to tell them exactly where they need to operate.

“If we just show the organ in front of them, and we don’t adjust for the height of the surgeon, for instance, that could be delaying the surgeon and could have inaccuracies for them,” she says. Low presence can also contribute to cybersickness, a feeling of dizziness or nausea that can occur when a user’s bodily perception does not align with what they’re seeing. However, if the mixed reality system is internally monitoring presence, it can make adjustments in real time, like moving the virtual organ rendering closer to eye level.

One marker within mixed reality that can be measured continuously and passively is reaction time, or how quickly a user interacts with the virtual elements. Through a series of experiments, the researchers determined that reaction time is associated with presence: a slow reaction time indicates low presence and a fast reaction time indicates high presence. Reaction time predicted presence with 80% accuracy, even with a small dataset.
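To make the idea of continuous, passive monitoring concrete, here is a minimal Python sketch of how a system might watch reaction times and flag a possible drop in presence. It is not the researchers' method: the `PresenceMonitor` class, the per-user baseline, the window size, and the 25% slowdown threshold are all hypothetical choices made for illustration, and the sample reaction times are invented.

```python
from collections import deque
from statistics import mean


class PresenceMonitor:
    """Flags possible low presence when recent reaction times slow down.

    Hypothetical sketch: the baseline, window size, and slowdown ratio
    are illustrative assumptions, not values from the study.
    """

    def __init__(self, baseline_ms: float, window: int = 5,
                 slowdown_ratio: float = 1.25):
        self.baseline_ms = baseline_ms        # user's typical reaction time
        self.slowdown_ratio = slowdown_ratio  # how much slower counts as "low"
        self.recent = deque(maxlen=window)    # rolling window of samples

    def record(self, reaction_ms: float) -> bool:
        """Add one reaction time; return True if an intervention looks warranted."""
        self.recent.append(reaction_ms)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough samples yet to judge
        return mean(self.recent) > self.baseline_ms * self.slowdown_ratio


# Invented sample data: the user starts near baseline, then slows down.
monitor = PresenceMonitor(baseline_ms=400.0, window=3)
flags = [monitor.record(rt) for rt in [390, 410, 405, 600, 650, 700]]
print(flags)
```

In this sketch a `True` flag is the point at which a system could try an adjustment of the kind Chandio describes, such as repositioning a virtual object. A rolling average is used so that a single slow reaction does not trigger an intervention on its own.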

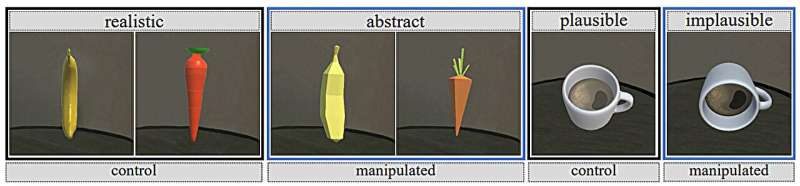

To test this, the researchers put participants in modified “Fruit Ninja” mixed reality scenarios (without the scoring), adjusting how authentic the digital elements appeared to manipulate presence.

Presence is a combination of two factors: place illusion and plausibility illusion. “First of all, virtual objects should look real,” says Anwar. That’s place illusion. “The objects should look at how physical things look, and the second thing is: are they behaving in a real way? Do they follow the laws of physics while they’re behaving in the real world?” This is plausibility illusion.

In one experiment, they adjusted place illusion and the fruit appeared either as lifelike fruit or abstract cartoons. In another experiment, they adjusted the plausibility illusion by showing mugs filling up with coffee either in the correct upright position or sideways.

What they found: People reacted more quickly to the lifelike fruit than to the cartoonish fruit. The same pattern held for the coffee mugs, with quicker reactions to plausible behavior than to implausible behavior.
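A comparison like this comes down to asking whether reaction times differ between two conditions. The Python sketch below shows one common way to check that, using Welch's t statistic on two independent samples. The article does not say which statistical test the study used, so the choice of test is an assumption, and the reaction times are invented purely to show the arithmetic.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a: list[float], b: list[float]) -> float:
    """Welch's t statistic for two independent samples (unequal variances)."""
    standard_error = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / standard_error


# Invented reaction times in milliseconds, not data from the study.
lifelike_ms = [420, 450, 430, 440, 410]
cartoon_ms = [520, 500, 540, 510, 530]

difference_ms = mean(lifelike_ms) - mean(cartoon_ms)
t_stat = welch_t(lifelike_ms, cartoon_ms)
print(f"mean difference: {difference_ms:.0f} ms, t = {t_stat:.1f}")
```

A negative difference here means the first condition produced faster reactions. If the same participants saw both conditions, a paired test would be the more appropriate choice.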

Reaction time is a good measure of presence because it highlights if the virtual elements are a tool or a distraction. “If a person is not feeling present, they would be looking into that environment and figuring out things,” explains Chandio. “Their cognition in perception is focused on something other than the task at hand, because they are figuring out what is going on.”

“Some people are going to argue, ‘Why would you not create the best scene in the first place?’ but that’s because humans are very complex,” Chandio explains. “What works for me may not work for you may not work for Fatima, because we have different bodies, our hands move differently, we think of the world differently. We perceive differently.”

More information:

Yasra Chandio et al., Investigating the Correlation Between Presence and Reaction Time in Mixed Reality, IEEE Transactions on Visualization and Computer Graphics (2023). DOI: 10.1109/TVCG.2023.3319563. On arXiv: DOI: 10.48550/arxiv.2309.11662

Citation:

Study: Immersive engagement in mixed reality can be measured with reaction time (2023, November 27), retrieved 25 June 2024 from https://techxplore.com/news/2023-11-immersive-engagement-reality-reaction.html
