
While roboticists have introduced increasingly sophisticated systems over the past decades, teaching these systems to successfully and reliably tackle new tasks has often proved challenging. Part of this training entails mapping high-dimensional data, such as images collected by on-board RGB cameras, to goal-oriented robotic actions.

Researchers at Imperial College London and the Dyson Robot Learning Lab recently introduced Render and Diffuse (R&D), a method that unifies low-level robot actions and RGB images using virtual 3D renders of a robotic system. This method, described in a paper published on the arXiv preprint server, could ultimately make it easier to teach robots new skills, reducing the vast number of human demonstrations required by many existing approaches.

“Our recent paper was driven by the goal of enabling humans to teach robots new skills efficiently, without the need for extensive demonstrations,” said Vitalis Vosylius, a final-year Ph.D. student at Imperial College London and lead author. “Existing techniques are data-intensive and struggle with spatial generalization, performing poorly when objects are positioned differently from the demonstrations. This is because predicting precise actions as a sequence of numbers from RGB images is extremely challenging when data is limited.”

During an internship at the Dyson Robot Learning Lab, Vosylius worked on a project that culminated in the development of R&D. The project aimed to simplify the learning problem for robots, enabling them to more efficiently predict the actions that will allow them to complete various tasks.

Unlike most robotic systems, humans learning new manual skills do not perform extensive calculations to determine how far to move their limbs. Instead, they typically imagine how their hands should move to tackle a specific task effectively.

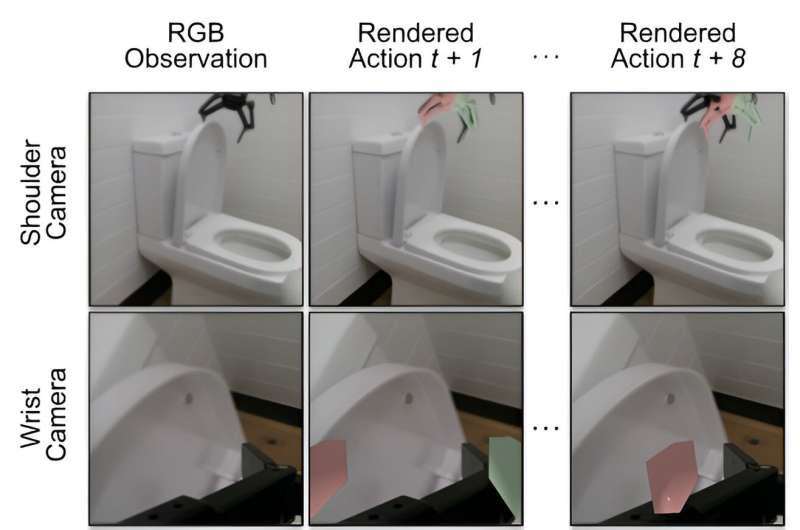

“Our method, Render and Diffuse, allows robots to do something similar: ‘imagine’ their actions within the image using virtual renders of their own embodiment,” Vosylius explained. “Representing robot actions and observations together as RGB images enables us to teach robots various tasks with fewer demonstrations and do so with improved spatial generalization capabilities.”
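To make the idea of “imagining” an action inside the image concrete, here is a minimal sketch, assuming a simple pinhole camera with known intrinsics and a gripper described by just a few 3D points. It computes where those points would appear in the camera image if the gripper moved to a candidate pose. R&D itself renders a full 3D model of the robot; the point-based projection, the camera parameters and the function names below are illustrative assumptions, not the authors' code.

```python
import numpy as np

def pose_matrix(rotation: np.ndarray, translation: np.ndarray) -> np.ndarray:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector translation."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T

def project_points(points_gripper: np.ndarray, T_cam_gripper: np.ndarray, K: np.ndarray) -> np.ndarray:
    """Project 3D points given in the gripper frame into pixel coordinates.

    points_gripper: (N, 3) points on a hypothetical gripper model, in metres.
    T_cam_gripper:  4x4 pose of the gripper in the camera frame, i.e. the pose
                    the robot would reach if it executed a candidate action.
    K:              3x3 pinhole intrinsics matrix.
    """
    n = points_gripper.shape[0]
    homogeneous = np.hstack([points_gripper, np.ones((n, 1))])   # (N, 4)
    points_cam = (T_cam_gripper @ homogeneous.T).T[:, :3]        # (N, 3) in camera frame
    pixels = (K @ points_cam.T).T                                 # (N, 3) homogeneous pixels
    return pixels[:, :2] / pixels[:, 2:3]                         # perspective divide by depth

# Hypothetical camera: 500 px focal length, principal point at (320, 240).
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])

# Hypothetical gripper "model": wrist point plus two fingertips.
gripper_points = np.array([[0.0, 0.0, 0.0],
                           [0.04, 0.0, 0.1],
                           [-0.04, 0.0, 0.1]])

# Candidate action: gripper 0.5 m in front of the camera, no rotation.
T = pose_matrix(np.eye(3), np.array([0.0, 0.0, 0.5]))
print(project_points(gripper_points, T, K))
```

With this pose the wrist lands exactly on the principal point (320, 240) and the two fingertips fall symmetrically on either side of it. Drawing such projected points (or a full render) onto the camera image is what lets actions and observations share one image space.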

For a robot to learn to complete a new task, it first needs to predict the actions it should perform based on the images captured by its sensors. The R&D method essentially allows robots to learn this mapping between images and actions more efficiently.

“As hinted by its name, our method has two main components,” Vosylius said. “First, we use virtual renders of the robot, allowing the robot to ‘imagine’ its actions in the same way it sees the environment. We do so by rendering the robot in the configuration it would end up in if it were to take certain actions.

“Second, we use a learned diffusion process that iteratively refines these imagined actions, ultimately resulting in a sequence of actions the robot needs to take to complete the task.”
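The iterative refinement Vosylius describes is a diffusion process. The sketch below shows only the mechanics of a standard DDPM-style reverse (denoising) loop over a short sequence of 2D actions, assuming a linear noise schedule. In R&D a trained network predicts the denoising signal from the rendered images; here a hypothetical “oracle” that already knows the target actions stands in for that network, so the loop recovers the target exactly. The schedule, names and action values are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Linear noise schedule (an assumption; many schedules are used in practice).
T_STEPS = 50
betas = np.linspace(1e-4, 0.2, T_STEPS)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)

# Hypothetical "correct" action sequence: 4 waypoints in 2D.
target_actions = np.array([[0.0, 0.0], [0.1, 0.05], [0.2, 0.1], [0.3, 0.1]])

def oracle_noise_predictor(x_t: np.ndarray, t: int) -> np.ndarray:
    """Stand-in for a learned network: returns the exact noise separating x_t
    from the target under the forward process
    x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps."""
    return (x_t - np.sqrt(alpha_bars[t]) * target_actions) / np.sqrt(1.0 - alpha_bars[t])

def denoise(shape) -> np.ndarray:
    x = rng.standard_normal(shape)  # start from pure Gaussian noise
    for t in reversed(range(T_STEPS)):
        eps_hat = oracle_noise_predictor(x, t)
        # DDPM reverse-step mean: remove the predicted noise component.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            # Re-inject a little fresh noise at every step except the last.
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(shape)
        else:
            x = mean
    return x

refined = denoise(target_actions.shape)
print(np.round(refined, 3))
```

With a real, imperfect network the final sample is only an approximation of a good action sequence, which is why the quality of the learned denoiser (and of the inputs it sees) matters.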

Using widely available 3D models of robots and rendering techniques, R&D can greatly simplify the acquisition of new skills while also significantly reducing training data requirements. The researchers evaluated their method in a series of simulations and found that it improved the generalization capabilities of robotic policies.

They also demonstrated the method on a real robot, which tackled six everyday tasks. These tasks included putting down the toilet seat, sweeping a cupboard, opening a box, placing an apple in a drawer, and opening and closing a drawer.

“The fact that using virtual renders of the robot to represent its actions leads to increased data efficiency is really exciting,” Vosylius said. “This means that by cleverly representing robot actions, we can significantly reduce the data required to train robots, ultimately reducing the labor-intensive need to collect extensive amounts of demonstrations.”

In the future, the team's method could be tested further and applied to other robotic tasks. In addition, the researchers’ promising results could inspire the development of similar approaches to simplify the training of algorithms for robotics applications.

“The ability to represent robot actions within images opens exciting possibilities for future research,” Vosylius added. “I am particularly excited about combining this approach with powerful image foundation models trained on massive internet data. This could allow robots to leverage the general knowledge captured by these models while still being able to reason about low-level robot actions.”

More information:

Vitalis Vosylius et al, Render and Diffuse: Aligning Image and Action Spaces for Diffusion-based Behaviour Cloning, arXiv (2024). DOI: 10.48550/arxiv.2405.18196

© 2024 Science X Network

Citation:

A simpler method to teach robots new skills (2024, June 17) retrieved 25 June 2024 from https://techxplore.com/news/2024-06-simpler-method-robots-skills.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.

By analyzing 56-million-year-old sediments, a UNIGE team has measured the increase in soil erosion caused by global warming, a telltale sign of major flooding.

Fifty-six million years ago, the Earth experienced a major and rapid climate warming driven by greenhouse gas emissions, probably from volcanic eruptions. A team from the University of Geneva (UNIGE) has analyzed sediments from this period to assess the impact of this global warming on the environment, and more specifically on soil erosion.

The study revealed a four-fold increase in soil erosion due to heavy rainfall and river flooding. These results suggest that current warming could have a similar effect over time, significantly increasing flood risks. The findings are published in the journal Geology.

Because of its similarities to current warming, the Paleocene-Eocene Thermal Maximum is closely studied to understand how the Earth’s environment reacts to a global rise in temperature. Occurring 56 million years ago, this episode saw the Earth warm by 5 to 8°C within 20,000 years, a very short time at the geological scale. It lasted for 200,000 years, causing major disruption to flora and fauna. According to recent IPCC reports, the Earth is now on the brink of a similar warming.

Scientists are analyzing sediments from this period to obtain a more accurate “picture” of this past warming and its consequences, and to make predictions for the future. These natural deposits are the product of soil erosion by water and wind, and rivers carried them into the oceans. Now preserved in rocks, these geological archives provide valuable information about our past, but also about our future.

“Our starting hypothesis was that, during such a period of warming, the seasonality and intensity of rainfall increases. This alters the dynamics of river flooding and intensifies sediment transport from the mountains to the oceans. Our objective is to test this hypothesis and, above all, to better quantify this change,” explains Marine Prieur, a doctoral student in the Section of Earth and Environmental Sciences at the UNIGE Faculty of Science, and first author of the study.

The research team studied a specific type of sediment, Microcodium grains, collecting around 20 kg of samples in the Pyrenees. These calcite prisms, no more than a millimeter in size, formed specifically during this period around plant roots in the soil. Because they are also found in marine sediments, they must have been eroded on the continent and transported to the sea. Microcodium grains are therefore a good indicator of the intensity of soil erosion on the continents.

“By quantifying the abundance of Microcodium grains in marine sediments, based on samples taken from the Spanish Pyrenees, which were submerged during the Palaeocene-Eocene, we have shown a four-fold increase in soil erosion on the continent during the climate change that occurred 56 million years ago,” reveals Sébastien Castelltort, a full professor in the Section of Earth and Environmental Sciences at the UNIGE Faculty of Science, who led the study.
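The “four-fold increase” is a ratio of abundances. A minimal sketch of that arithmetic, assuming abundance is expressed as grains per kilogram of sediment and compared between an interval before the warming event and the warming interval itself. The normalization choice is an assumption, and the counts and masses below are invented purely for illustration; they are not data from the study.

```python
def abundance_per_kg(grain_count: int, sample_mass_kg: float) -> float:
    """Microcodium grains per kilogram of sediment (a hypothetical normalization)."""
    if sample_mass_kg <= 0:
        raise ValueError("sample mass must be positive")
    return grain_count / sample_mass_kg

def fold_change(before: float, during: float) -> float:
    """How many times larger the abundance is during the event than before it."""
    return during / before

# Invented example values, NOT measurements from the study.
pre_event = abundance_per_kg(grain_count=150, sample_mass_kg=2.5)   # 60 grains/kg
warming = abundance_per_kg(grain_count=480, sample_mass_kg=2.0)     # 240 grains/kg
print(fold_change(pre_event, warming))  # 4.0
```

Normalizing by sample mass matters because raw counts from samples of different sizes cannot be compared directly.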

This discovery highlights the significant impact of global warming on soil erosion through the intensification of rainfall during storm events and the increase in river flooding. Such a rise in erosion is an indicator of heavy flooding.

“These results relate specifically to this area of the Pyrenees, and each geographical zone is dependent on certain unique factors. However, increased sedimentary input in the Paleocene-Eocene strata is observed worldwide. It is, therefore, a global phenomenon, on an Earth-wide scale, during a significant warming event,” points out Marine Prieur.

These results provide new information that can be incorporated into predictions about our future climate, in particular to better assess the risks of flooding and soil collapse in populated areas.

“We need to bear in mind that this increase in erosion has occurred naturally, under the effect of global warming alone. Today, to predict what lies ahead, we must also consider the impact of human action, such as deforestation, which amplifies various phenomena, including erosion,” conclude the scientists.

More information:

Marine Prieur et al, Fingerprinting enhanced floodplain reworking during the Paleocene−Eocene Thermal Maximum in the Southern Pyrenees (Spain): Implications for channel dynamics and carbon burial, Geology (2024). DOI: 10.1130/G52180.1

Provided by

University of Geneva

Citation:

Geological archives may predict our climate future (2024, June 25) retrieved 25 June 2024 from https://phys.org/news/2024-06-geological-archives-climate-future.html


Boosting the performance of solar cells, transistors, LEDs, and batteries will require better electronic materials, made from novel compositions that have yet to be discovered.

To speed up the search for advanced functional materials, scientists are using AI tools to identify promising materials from hundreds of millions of chemical formulations. In tandem, engineers are building machines that can print hundreds of material samples at a time based on chemical compositions tagged by AI search algorithms.

But to date, there’s been no similarly speedy way to confirm that these printed materials actually perform as expected. This last step of material characterization has been a major bottleneck in the pipeline of advanced materials screening.

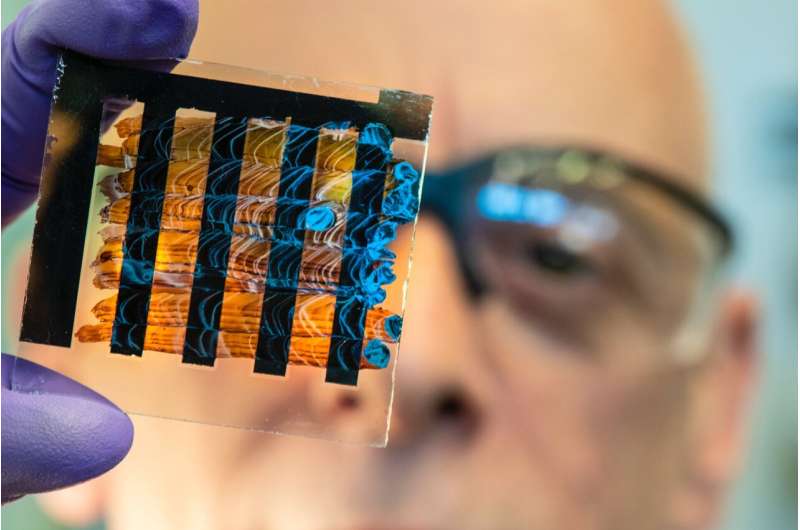

Now, a new computer vision technique developed by MIT engineers significantly speeds up the characterization of newly synthesized electronic materials. The technique automatically analyzes images of printed semiconducting samples and quickly estimates two key electronic properties for each sample: band gap (a measure of electron activation energy) and stability (a measure of longevity).

The new technique accurately characterizes electronic materials 85 times faster than the standard benchmark approach.

The researchers intend to use the technique to speed up the search for promising solar cell materials. They also plan to incorporate the technique into a fully automated materials screening system.

“Ultimately, we envision fitting this technique into an autonomous lab of the future,” says MIT graduate student Eunice Aissi. “The whole system would allow us to give a computer a materials problem, have it predict potential compounds, and then run 24-7 making and characterizing those predicted materials until it arrives at the desired solution.”

“The application space for these techniques ranges from improving solar energy to transparent electronics and transistors,” adds MIT graduate student Alexander (Aleks) Siemenn. “It really spans the full gamut of where semiconductor materials can benefit society.”

Aissi and Siemenn detail the new technique in a study appearing in Nature Communications. Their MIT co-authors include graduate student Fang Sheng, postdoc Basita Das, and professor of mechanical engineering Tonio Buonassisi, along with former visiting professor Hamide Kavak of Cukurova University and visiting postdoc Armi Tiihonen of Aalto University.

Once a new electronic material is synthesized, the characterization of its properties is typically handled by a “domain expert” who examines one sample at a time using a benchtop tool called a UV-Vis, which scans through different colors of light to determine where the semiconductor begins to absorb more strongly. This manual process is precise but also time-consuming: A domain expert typically characterizes about 20 material samples per hour—a snail’s pace compared to some printing tools that can lay down 10,000 different material combinations per hour.

“The manual characterization process is very slow,” Buonassisi says. “They give you a high amount of confidence in the measurement, but they’re not matched to the speed at which you can put matter down on a substrate nowadays.”

To speed up the characterization process and clear one of the largest bottlenecks in materials screening, Buonassisi and his colleagues looked to computer vision—a field that applies computer algorithms to quickly and automatically analyze optical features in an image.

“There’s power in optical characterization methods,” Buonassisi notes. “You can obtain information very quickly. There is richness in images, over many pixels and wavelengths, that a human just can’t process but a computer machine-learning program can.”

The team realized that certain electronic properties—namely, band gap and stability—could be estimated based on visual information alone, if that information were captured with enough detail and interpreted correctly.

With that goal in mind, the researchers developed two new computer vision algorithms to automatically interpret images of electronic materials: one to estimate band gap and the other to determine stability.

The first algorithm is designed to process visual data from highly detailed, hyperspectral images.

“Instead of a standard camera image with three channels—red, green, and blue (RGB)—the hyperspectral image has 300 channels,” Siemenn explains. “The algorithm takes that data, transforms it, and computes a band gap. We run that process extremely fast.”
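The article does not say which transform the band gap algorithm applies. As an illustration only, the sketch below uses the classic Tauc method, a common way to read a band gap off an absorption spectrum: for a direct-gap material, (αhν)² is plotted against photon energy hν, a straight line is fitted to the rising absorption edge, and the point where that line crosses zero is taken as the band gap. The synthetic spectrum, the fitting window and the function name are assumptions, not the MIT team's algorithm.

```python
import numpy as np

def tauc_band_gap(energy_ev: np.ndarray, absorption: np.ndarray, fit_window) -> float:
    """Estimate a direct band gap (eV) by linear extrapolation of a Tauc plot.

    energy_ev:  photon energies h*nu in eV (1D array).
    absorption: absorption coefficient alpha, or a quantity proportional to it.
    fit_window: (low, high) energy range in eV covering the linear absorption edge.
    """
    tauc = (absorption * energy_ev) ** 2          # (alpha * h * nu)^2 for a direct gap
    lo, hi = fit_window
    mask = (energy_ev >= lo) & (energy_ev <= hi)
    slope, intercept = np.polyfit(energy_ev[mask], tauc[mask], 1)
    return float(-intercept / slope)              # energy where the fitted line hits zero

# Synthetic direct-gap spectrum with an assumed gap of 1.6 eV:
# alpha = sqrt(E - Eg) / E above the gap and 0 below, so (alpha*E)^2 = E - Eg.
energies = np.linspace(1.2, 2.2, 301)
true_gap = 1.6
alpha = np.where(energies > true_gap,
                 np.sqrt(np.clip(energies - true_gap, 0.0, None)) / energies,
                 0.0)

print(round(tauc_band_gap(energies, alpha, fit_window=(1.7, 2.1)), 3))  # 1.6
```

A hyperspectral pixel would supply the spectrum here, one value per channel, after converting each channel's wavelength to photon energy; real spectra are noisy, so choosing the fitting window is the delicate part.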

The second algorithm analyzes standard RGB images and assesses a material’s stability based on visual changes in the material’s color over time.

“We found that color change can be a good proxy for degradation rate in the material system we are studying,” Aissi says.

The team applied the two new algorithms to characterize the band gap and stability for about 70 printed semiconducting samples. They used a robotic printer to deposit samples on a single slide, like cookies on a baking sheet. Each deposit was made with a slightly different combination of semiconducting materials. In this case, the team printed different ratios of perovskites—a type of material that is expected to be a promising solar cell candidate, though it is also known to quickly degrade.

“People are trying to change the composition—add a little bit of this, a little bit of that—to try to make [perovskites] more stable and high-performance,” Buonassisi says.

Once they printed 70 different compositions of perovskite samples on a single slide, the team scanned the slide with a hyperspectral camera. Then they applied an algorithm that visually “segments” the image, automatically isolating the samples from the background. They ran the new band gap algorithm on the isolated samples and automatically computed the band gap for every sample. The entire band gap extraction process took about six minutes.
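Segmentation here means separating each printed droplet from the background so it can be analyzed on its own. A minimal sketch, assuming droplets are brighter than the background in a single-channel image: threshold the image, then label each 4-connected region with a breadth-first flood fill. The threshold and the toy image are assumptions; the article does not describe the team's actual segmentation algorithm.

```python
from collections import deque

import numpy as np

def label_droplets(image: np.ndarray, threshold: float):
    """Return an integer label map (0 = background) and the number of droplets found."""
    foreground = image > threshold
    labels = np.zeros(image.shape, dtype=int)
    current = 0
    rows, cols = image.shape
    for r in range(rows):
        for c in range(cols):
            if foreground[r, c] and labels[r, c] == 0:
                current += 1                      # found an unlabelled droplet pixel
                labels[r, c] = current
                queue = deque([(r, c)])
                while queue:                      # breadth-first flood fill
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and foreground[ny, nx] and labels[ny, nx] == 0):
                            labels[ny, nx] = current
                            queue.append((ny, nx))
    return labels, current

# Toy 6x8 "slide" with two bright droplets on a dark background.
slide = np.zeros((6, 8))
slide[1:3, 1:3] = 0.9
slide[3:5, 5:7] = 0.8
labels, count = label_droplets(slide, threshold=0.5)
print(count)  # 2
```

Once every droplet has its own label, a per-sample measurement is just a mask, for example `slide[labels == k].mean()` for droplet `k`, which is what allows the band gap step to run on all samples automatically.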

“It would normally take a domain expert several days to manually characterize the same number of samples,” Siemenn says.

To test for stability, the team placed the same slide in a chamber in which they varied the environmental conditions, such as humidity, temperature, and light exposure. They used a standard RGB camera to take an image of the samples every 30 seconds over two hours. They then applied the second algorithm to the images of each sample over time to estimate the degree to which each droplet changed color, or degraded under various environmental conditions. In the end, the algorithm produced a “stability index,” or a measure of each sample’s durability.
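The article does not define the stability index. One plausible way to turn “how much each droplet changed color” into a single number, offered as an assumption rather than the paper's formula, is to measure each frame's RGB distance from the droplet's first frame and average it over the run. Droplets that discolor faster or further then get a larger degradation score, whose inverse could serve as a stability-style index. The frame timing follows the article (one image every 30 seconds for two hours); the colors are synthetic.

```python
import numpy as np

def degradation_score(mean_rgb_over_time: np.ndarray) -> float:
    """Time-averaged Euclidean color distance from the first frame.

    mean_rgb_over_time: (T, 3) array of a droplet's mean RGB value per frame,
    scaled to [0, 1]. Larger scores mean more (or earlier) color change.
    """
    initial = mean_rgb_over_time[0]
    distances = np.linalg.norm(mean_rgb_over_time - initial, axis=1)
    return float(distances.mean())

# One frame every 30 s for 2 h gives 241 frames, counting the frame at t = 0.
n_frames = 2 * 60 * 60 // 30 + 1
t = np.linspace(0.0, 1.0, n_frames)[:, None]

dark = np.array([0.15, 0.10, 0.10])     # hypothetical fresh-sample color
yellow = np.array([0.80, 0.70, 0.20])   # hypothetical degraded color

stable_sample = dark + 0.05 * t * (yellow - dark)     # barely changes
unstable_sample = dark + 0.90 * t * (yellow - dark)   # turns mostly yellow

print(degradation_score(stable_sample) < degradation_score(unstable_sample))  # True
```

Averaging over time, rather than looking only at the final frame, rewards samples that hold their color for longer even if they end up equally discolored.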

As a check, the team compared their results with manual measurements of the same droplets, taken by a domain expert. Compared to the expert’s benchmark estimates, the team’s band gap and stability results were 98.5% and 96.9% as accurate, respectively, and were obtained 85 times faster.

“We were constantly shocked by how these algorithms were able to not just increase the speed of characterization, but also to get accurate results,” Siemenn says. “We do envision this slotting into the current automated materials pipeline we’re developing in the lab, so we can run it in a fully automated fashion, using machine learning to guide where we want to discover these new materials, printing them, and then actually characterizing them, all with very fast processing.”

More information:

Using Scalable Computer Vision to Automate High-throughput Semiconductor Characterization, Nature Communications (2024). DOI: 10.1038/s41467-024-48768-2

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and teaching.

Citation:

Computer vision method characterizes electronic material properties 85 times faster than conventional approach (2024, June 11) retrieved 25 June 2024 from https://techxplore.com/news/2024-06-vision-method-characterizes-electronic-material.html


Although there is the saying, “straight from the horse’s mouth,” it’s impossible to get a horse to tell you if it’s in pain or experiencing joy. Yet, its body will express the answer in its movements. To a trained eye, pain will manifest as a change in gait, or in the case of joy, the facial expressions of the animal could change. But what if we can automate this with AI? And what about AI models for cows, dogs, cats, or even mice?

Automating the analysis of animal behavior not only removes observer bias but also helps humans reach the right answer more efficiently.

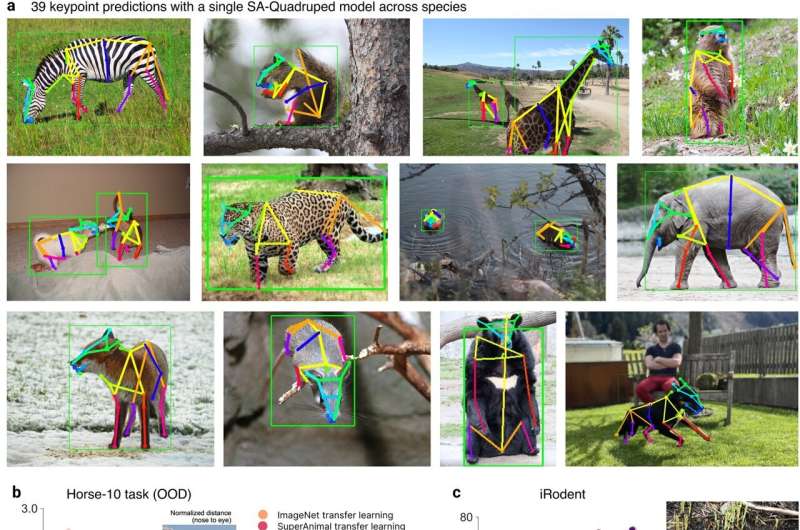

A new study marks the beginning of a new chapter in posture analysis for behavioral phenotyping. Mackenzie Mathis’ laboratory at EPFL has published a Nature Communications article describing a particularly effective new open-source tool that requires no human annotations to get the model to track animals.

Named “SuperAnimal,” it can automatically recognize, without human supervision, the location of “keypoints” (typically joints) in a whole range of animals—over 45 animal species—and even in mythical ones.

“The current pipeline allows users to tailor deep learning models, but this then relies on human effort to identify keypoints on each animal to create a training set,” explains Mathis.

“This leads to duplicated labeling efforts across researchers and can lead to different semantic labels for the same keypoints, making merging data to train large foundation models very challenging. Our new method provides a new approach to standardize this process and train large-scale datasets. It also makes labeling 10 to 100 times more effective than current tools.”

The “SuperAnimal method” is an evolution of a pose estimation technique that Mathis’ laboratory had already distributed under the name “DeepLabCut.”

“Here, we have developed an algorithm capable of compiling a large set of annotations across databases and train the model to learn a harmonized language—we call this pre-training the foundation model,” explains Shaokai Ye, a Ph.D. student researcher and first author of the study. “Then users can simply deploy our base model or fine-tune it on their own data, allowing for further customization if needed.”
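Part of the problem the team describes is that different datasets name the same body part differently. A minimal sketch of harmonizing such annotations, assuming each dataset stores a dictionary from its own keypoint names to (x, y) coordinates: a per-dataset lookup table maps those names onto one shared vocabulary, and keypoints a dataset never labels are marked as missing (NaN) so a training loss can skip them. The vocabulary, mappings and dataset names are hypothetical and are not SuperAnimal's actual keypoint set.

```python
import math

# Hypothetical unified keypoint vocabulary, in a fixed order.
UNIFIED_KEYPOINTS = ["nose", "left_eye", "right_eye", "neck", "tail_base"]

# Hypothetical per-dataset name mappings onto the unified vocabulary.
NAME_MAPS = {
    "lab_mice": {"snout": "nose", "eyeL": "left_eye", "eyeR": "right_eye", "tailbase": "tail_base"},
    "dog_videos": {"nose_tip": "nose", "l_eye": "left_eye", "r_eye": "right_eye", "withers": "neck"},
}

def harmonize(dataset: str, annotation: dict) -> list:
    """Convert one dataset-specific annotation into a fixed-order list of (x, y)
    pairs over UNIFIED_KEYPOINTS, using (nan, nan) where a keypoint is unlabelled."""
    mapping = NAME_MAPS[dataset]
    unified = {mapping[name]: xy for name, xy in annotation.items() if name in mapping}
    return [unified.get(k, (math.nan, math.nan)) for k in UNIFIED_KEYPOINTS]

mouse = harmonize("lab_mice", {"snout": (12.0, 40.0), "tailbase": (88.0, 42.0)})
dog = harmonize("dog_videos", {"nose_tip": (5.0, 9.0), "withers": (30.0, 20.0)})
print(mouse[0], dog[0])  # (12.0, 40.0) (5.0, 9.0)
```

After this step, “snout” from one lab and “nose_tip” from another land in the same slot, so the two datasets can be pooled to train a single model.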

These advances will make motion analysis much more accessible. “Veterinarians could be particularly interested, as well as those in biomedical research—especially when it comes to observing the behavior of laboratory mice. But it can go further,” says Mathis, mentioning neuroscience and… athletes (canine or otherwise). Other species—birds, fish, and insects—are also within the scope of the model’s next evolution.

“We also will leverage these models in natural language interfaces to build even more accessible and next-generation tools. For example, Shaokai and I, along with our co-authors at EPFL, recently developed AmadeusGPT, published recently at NeurIPS, that allows for querying video data with written or spoken text.”

“Expanding this for complex behavioral analysis will be very exciting.”

SuperAnimal is now available to researchers worldwide through its open-source distribution (github.com/DeepLabCut).

More information:

SuperAnimal pretrained pose estimation models for behavioral analysis, Nature Communications (2024). DOI: 10.1038/s41467-024-48792-2

Provided by

École Polytechnique Fédérale de Lausanne

Citation:

Unifying behavioral analysis through animal foundation models (2024, June 21) retrieved 25 June 2024 from https://phys.org/news/2024-06-behavioral-analysis-animal-foundation.html
