The eyes of raptors can accurately perceive prey from kilometers away. Is it possible to model camera technology after birds’ eyes? Researchers have developed a new type of camera that is inspired by the structures and functions of birds’ eyes. A research team led by Prof. Kim Dae-Hyeong at the Center for Nanoparticle Research within the Institute for Basic Science (IBS), in collaboration with Prof. Song Young Min at the Gwangju Institute of Science and Technology (GIST), has developed a perovskite-based camera specializing in object detection.

The work is published in the journal Science Robotics.

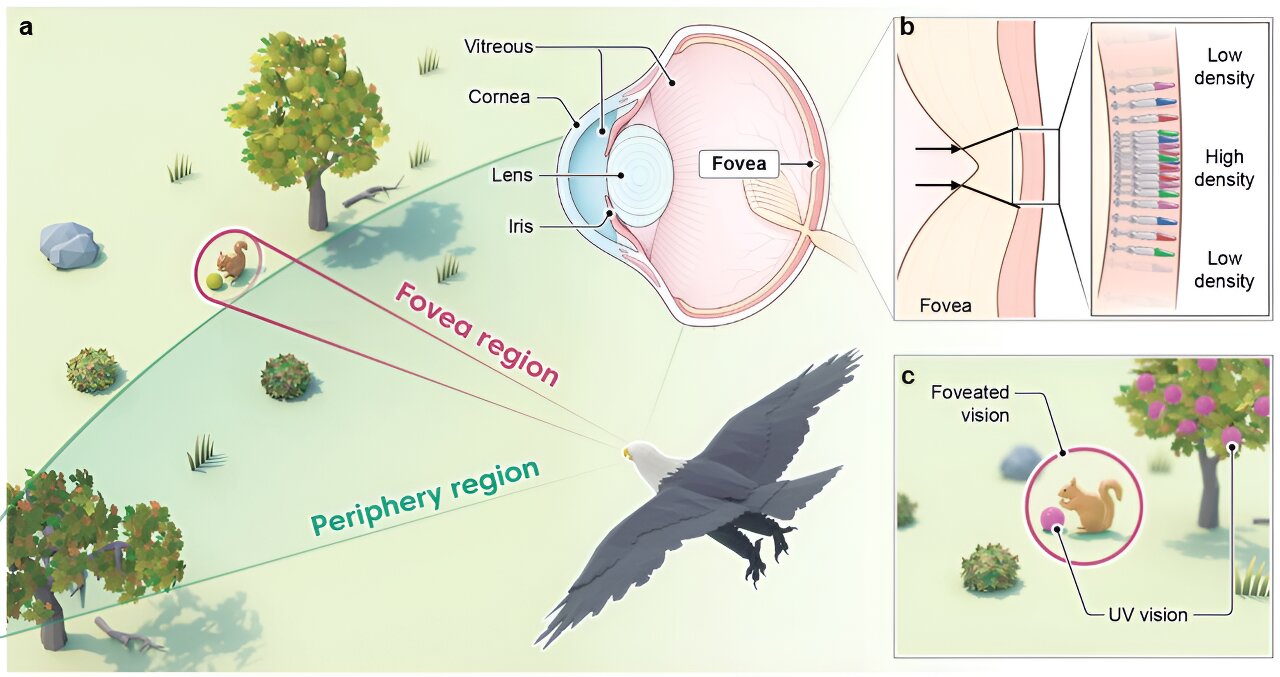

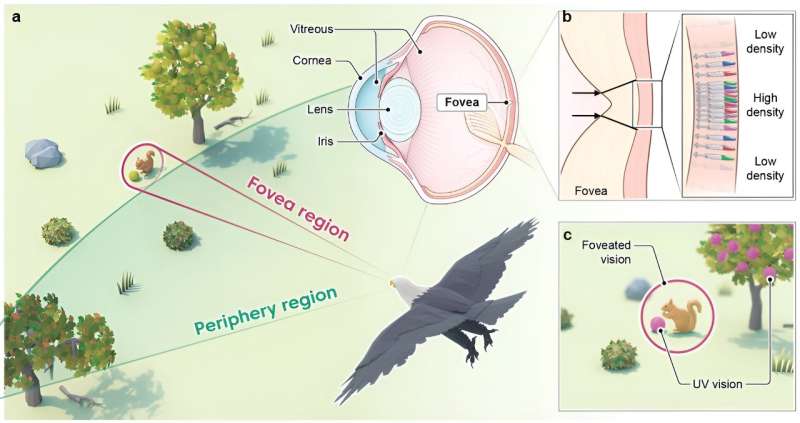

The eyes of different organisms in the natural world have evolved and been optimized to suit their habitats. Shaped by long evolutionary adaptation to flight at high altitudes, bird eyes have developed unique structures and visual functions.

The retina of an animal’s eye contains a small pit called the fovea, which refracts the light entering the eye. Unlike the shallow foveae of human eyes, bird eyes have deep central foveae that refract incoming light strongly. The region of highest cone density lies within the fovea (Figure 1b), and this refraction magnifies the image there, allowing birds to perceive distant objects clearly (Figure 1c). This specialized vision is known as foveated vision.
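The magnifying effect of a deep fovea, a zoomed-in center combined with a still-visible periphery, can be illustrated with a simple image warp. This sketch is purely illustrative and is not the optical design from the paper. It assumes a hypothetical piecewise-linear radial mapping: a central zone of radius `r_fovea` is magnified by a fixed factor `magnification`, and the periphery is compressed so the full field of view is preserved. The function names and parameter values are hypothetical.

```python
import math


def foveated_radius(r_out: float, r_fovea: float = 0.5, magnification: float = 3.0) -> float:
    """Map a normalized output radius (0 = center, 1 = edge) to the scene radius it shows.

    Hypothetical piecewise-linear model: inside r_fovea the scene is magnified
    uniformly; the periphery is compressed so that r_out = 1 still shows r_in = 1,
    i.e. the full field of view is kept.
    """
    r_in_edge = r_fovea / magnification  # scene radius visible at the foveal boundary
    if r_out <= r_fovea:
        return r_out / magnification  # foveal zone: uniform magnification
    # Peripheral zone: linear compression from r_in_edge out to 1.
    return r_in_edge + (r_out - r_fovea) * (1.0 - r_in_edge) / (1.0 - r_fovea)


def foveate(image, r_fovea=0.5, magnification=3.0):
    """Warp a 2D list-of-lists image (at least 2x2) with nearest-neighbour sampling."""
    h, w = len(image), len(image[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    R = min(cy, cx)  # radius of the inscribed circle, used to normalize radii
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            dy, dx = y - cy, x - cx
            r_out = math.hypot(dy, dx) / R
            # Ratio of scene radius to output radius (<= 1 inside the inscribed circle).
            scale = foveated_radius(r_out, r_fovea, magnification) / r_out if r_out > 0 else 1.0
            # Clamp to the image bounds (corners outside the inscribed circle hit the edge).
            sy = min(max(round(cy + dy * scale), 0), h - 1)
            sx = min(max(round(cx + dx * scale), 0), w - 1)
            row.append(image[sy][sx])
        out.append(row)
    return out
```

Applied to a 9×9 image, `foveate` repeats the central pixels (the magnified foveal view), while pixels on the inscribed circle still sample the original border, so the surroundings stay in view.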

While human eyes can see only visible light, bird eyes have four types of cone cells that respond to ultraviolet (UV) as well as visible (red, green, blue; RGB) light. This tetrachromatic vision gives birds richer visual information and helps them detect target objects in a dynamic environment (Figure 1c).

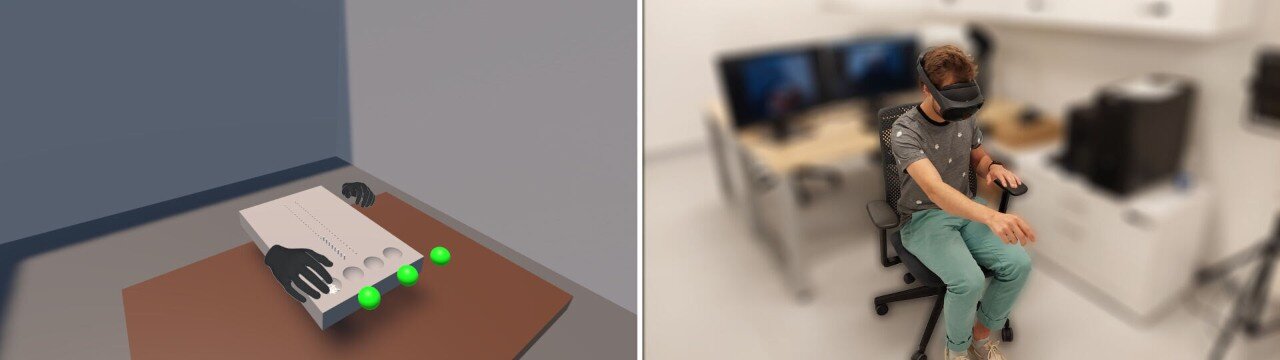

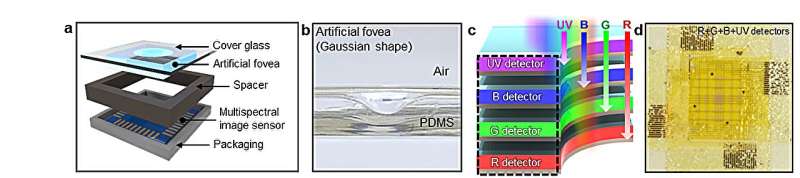

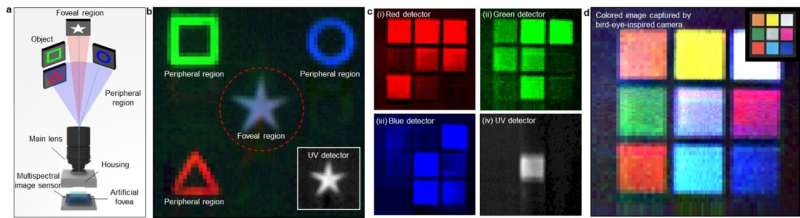

Inspired by these capabilities, the IBS research team designed a new type of camera that specializes in object detection, incorporating an artificial fovea and a multispectral image sensor that responds to both UV and RGB light (Figure 2a).

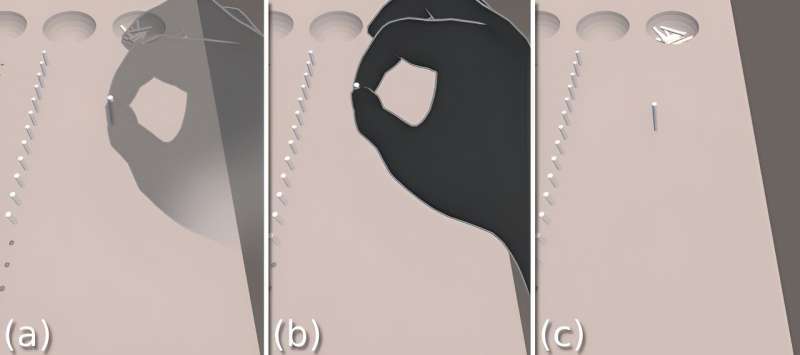

First, the researchers fabricated the artificial fovea by mimicking the deep central fovea of the bird’s eye (Figure 2b) and optimized its design through optical simulation. This allows the camera to magnify distant target objects without image distortion.

The team then used perovskites, materials known for their excellent electrical and optical properties, to fabricate the multispectral image sensor. Four types of photodetectors were made from different perovskite materials, each absorbing a different range of wavelengths, and the multispectral image sensor was completed by vertically stacking the four photodetectors (Figure 2c and 2d).
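The article does not give the perovskite compositions, bandgaps, or stacking order. The sketch below only illustrates the general principle behind filter-free color separation in a vertically stacked sensor, using the standard relation that a semiconductor absorbs photons whose energy exceeds its bandgap, so its cutoff wavelength is roughly 1239.84 / E_g nm for E_g in eV. It assumes a hypothetical, idealized stack with the widest-bandgap layer on top; the bandgap values in `LAYERS` and all function names are hypothetical.

```python
# Planck constant times speed of light in eV·nm: cutoff wavelength (nm) = HC_EV_NM / bandgap (eV).
HC_EV_NM = 1239.84

# Hypothetical stack, top to bottom: widest bandgap on top so that each layer
# absorbs the shortest remaining wavelengths and transmits longer ones downward.
LAYERS = [("UV", 3.10), ("blue", 2.50), ("green", 2.10), ("red", 1.70)]


def cutoff_nm(bandgap_ev: float) -> float:
    """Longest wavelength a layer with this bandgap can absorb."""
    return HC_EV_NM / bandgap_ev


def absorbing_layer(wavelength_nm: float, layers=LAYERS):
    """Return the first layer (from the top) that absorbs this wavelength.

    Idealized model: a layer absorbs every photon with energy >= its bandgap
    and is fully transparent to the rest. Returns None if all layers transmit it.
    """
    for name, bandgap in layers:
        if wavelength_nm <= cutoff_nm(bandgap):
            return name
    return None


def layer_signals(spectrum: dict, layers=LAYERS) -> dict:
    """Sum the intensity each layer absorbs from a {wavelength_nm: intensity} spectrum."""
    signals = {name: 0.0 for name, _ in layers}
    for wavelength, intensity in spectrum.items():
        name = absorbing_layer(wavelength, layers)
        if name is not None:
            signals[name] += intensity
    return signals
```

For example, `layer_signals({365: 1.0, 450: 2.0, 650: 0.5})` returns `{'UV': 1.0, 'blue': 2.0, 'green': 0.0, 'red': 0.5}`: each band lands in its own layer with no color filter involved. Real absorbers are not ideal step functions, so a practical sensor would also need to correct for spectral crosstalk between layers.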

The first co-author Dr. Park Jinhong states, “We also developed a new transfer process to vertically stack the photodetectors. By using the perovskite patterning method developed in our previous research, we were able to fabricate the multispectral image sensor that can detect UV and RGB without additional color filters.”

Conventional cameras that use a zoom lens to magnify objects have a drawback: they capture only the target object, not its surroundings. By contrast, the bird-eye-inspired camera provides both a magnified view of the foveal region and a view of the surrounding peripheral region (Figure 3a and 3b).

By comparing these two fields of view, the bird-eye-inspired camera detects motion better than a conventional camera (Figure 3c and 3d). In addition, the camera is cheaper and lighter, because it can distinguish UV and RGB light without additional color filters.
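The article does not describe the camera’s motion-detection algorithm. As a toy illustration only, the sketch below applies frame differencing, a generic motion-detection technique, to a hypothetical one-dimensional sensor row. It shows why a magnified (foveal) view can register a small displacement that an unmagnified (peripheral) view misses. It is not the authors’ method and does not reproduce the reported results; `sample_row`, `changed_pixels`, and `detect_motion` are hypothetical names.

```python
def sample_row(edge: float, magnification: float, n_pixels: int = 12) -> list:
    """Hypothetical 1D sensor row: pixel i samples scene position i / magnification.

    The scene is dark (0) left of `edge` and bright (255) from `edge` onward.
    """
    return [255 if i / magnification >= edge else 0 for i in range(n_pixels)]


def changed_pixels(prev: list, curr: list, threshold: int = 10) -> int:
    """Frame differencing: count pixels whose intensity changed by more than threshold."""
    return sum(1 for p, c in zip(prev, curr) if abs(c - p) > threshold)


def detect_motion(edge_before: float, edge_after: float, magnification: float,
                  threshold: int = 10) -> bool:
    """Report motion if any pixel changed between two frames of the same view."""
    prev = sample_row(edge_before, magnification)
    curr = sample_row(edge_after, magnification)
    return changed_pixels(prev, curr, threshold) > 0
```

Moving the edge from 2.1 to 2.5 scene units changes no pixels at magnification 1.0, because both positions fall between the same pixel samples. At magnification 3.0 one pixel flips, so `detect_motion(2.1, 2.5, magnification=3.0)` is `True` while `detect_motion(2.1, 2.5, magnification=1.0)` is `False`.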

The research team verified the object recognition and motion detection capabilities of the camera through simulations. For object recognition, the new camera achieved a confidence score of 0.76, about twice the 0.39 scored by the existing camera system. Its motion detection rate was also 3.6 times that of the existing system, indicating significantly higher sensitivity to motion.

“Birds’ eyes have evolved to quickly and accurately detect distant objects while in flight. Our camera can be used in areas that need to detect objects clearly, such as robots and autonomous vehicles. In particular, the camera has great potential for application to drones operating in environments similar to those in which birds live,” remarked Prof. Kim.

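By combining foveated magnification with filter-free multispectral sensing, this camera technology marks a significant advance in object detection, with potential applications wherever distant or moving objects must be detected, from robotics and autonomous vehicles to drones.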

More information:

Jinhong Park et al, Avian eye–inspired perovskite artificial vision system for foveated and multispectral imaging, Science Robotics (2024). DOI: 10.1126/scirobotics.adk6903

Citation: Innovative bird eye–inspired camera developed for enhanced object detection (2024, May 30), retrieved 24 June 2024 from https://techxplore.com/news/2024-05-bird-eyeinspired-camera.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.