Imagine finding your location without GPS. Now apply this to tracking an item inside the body. That has been the challenge of tracking “smart” pills, which are pills equipped with sensors, once they are swallowed. At the USC Viterbi School of Engineering, innovations in wearable electronics and AI have led to ingestible sensors that not only detect stomach gases but also provide real-time location tracking.

Developed by the Khan Lab, these capsules are designed to identify gases associated with gastritis and gastric cancers. The research, to be published in Cell Reports Physical Science, shows how these smart pills have been accurately monitored with a newly designed wearable system. This breakthrough is a significant step forward for ingestible technology, which Yasser Khan, an Assistant Professor of Electrical and Computer Engineering at USC, believes could someday serve as a “Fitbit for the gut” and as a tool for early disease detection.

While wearable sensors hold great promise for tracking body functions, the ability to track ingestible devices inside the body has been limited. However, thanks to innovations in materials, the miniaturization of electronics, and new protocols developed by Khan, researchers have demonstrated the ability to track the location of devices specifically within the gastrointestinal (GI) tract.

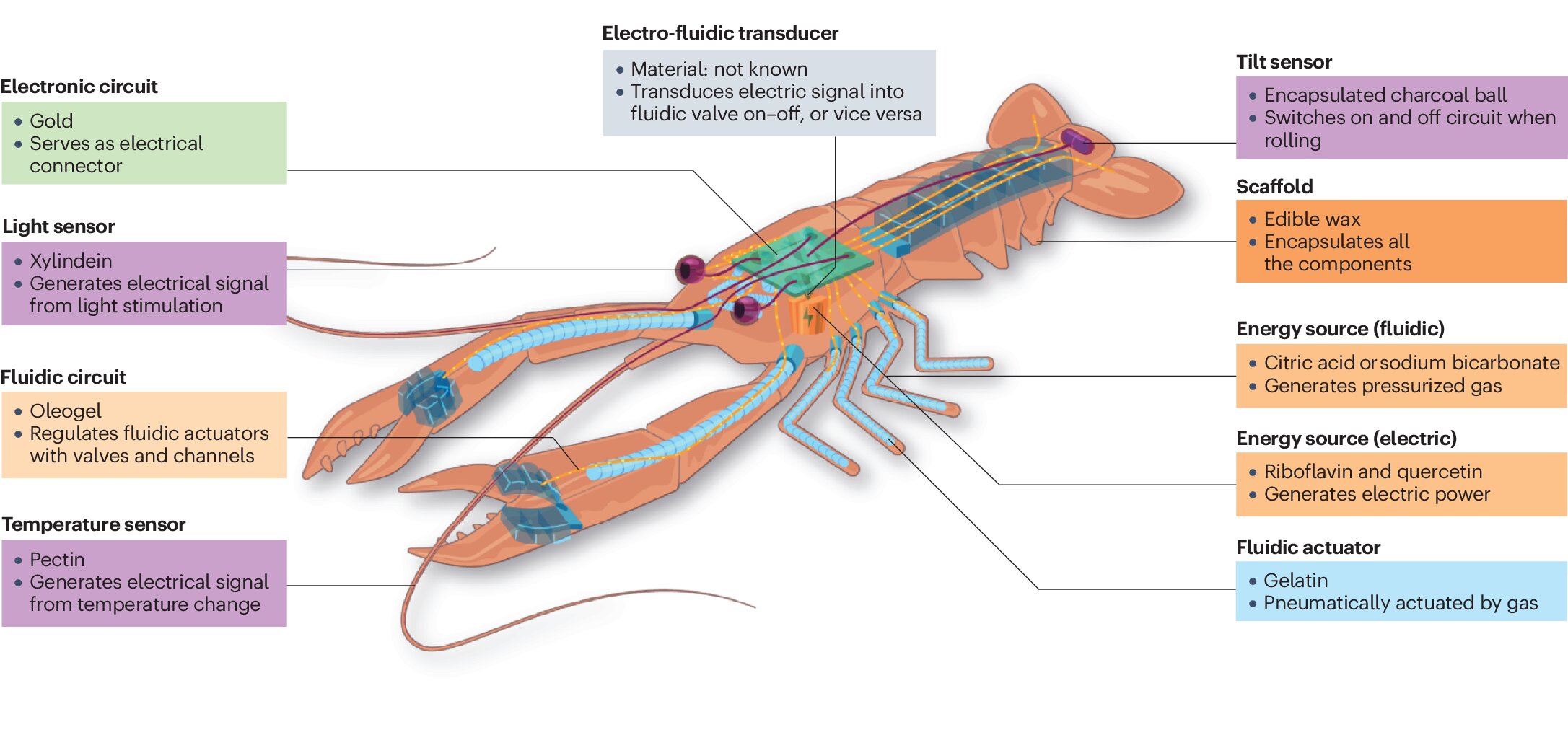

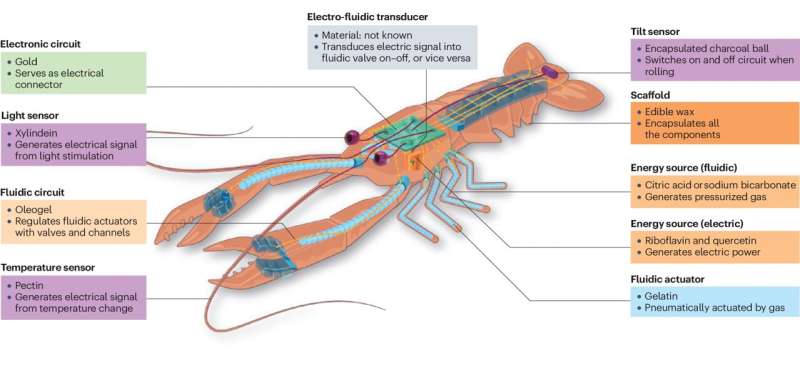

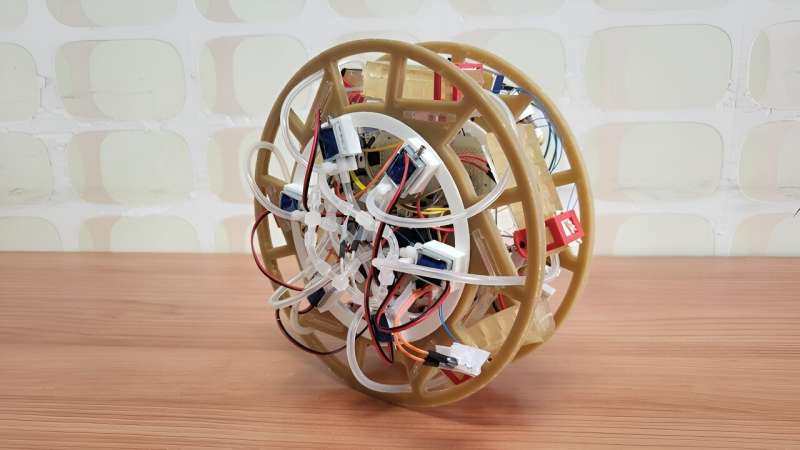

Khan’s team, working with the USC Institute for Technology and Medical Systems Innovation (ITEMS) at the Michelson Center for Convergent Biosciences, placed a wearable coil that generates a magnetic field on a T-shirt. This field, combined with a trained neural network, allows the team to locate the capsule within the body. According to Ansa Abdigazy, lead author of the work and a Ph.D. student in the Khan Lab, this had not previously been demonstrated with a wearable.

The second innovation in this device is a newly created “sensing” material. The capsules are outfitted not just with electronics for location tracking but also with an “optical sensing membrane that is selective to gases.” This membrane is composed of materials whose electrons change their behavior in the presence of ammonia gas.

Ammonia is produced by H. pylori, a gut bacterium that, when elevated, could signal peptic ulcer, gastric cancer, or irritable bowel syndrome. Thus, says Khan, “The presence of this gas is a proxy and can be used as an early disease detection mechanism.”

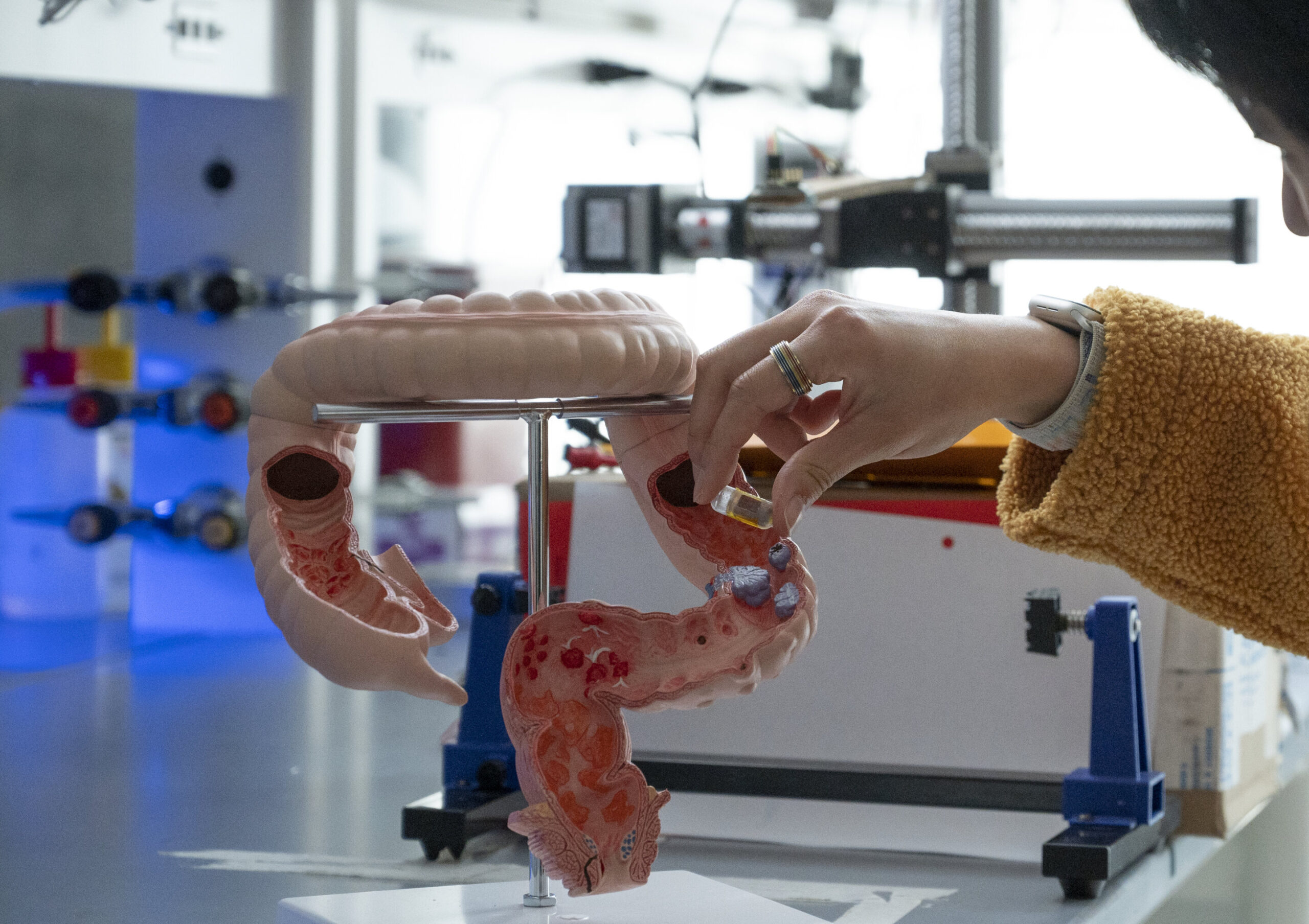

The USC team has tested the ingestible device in a range of environments, including liquids and a simulated bovine intestine. “The ingestible system with the wearable coil is both compact and practical, offering a clear path for application in human health,” says Khan. The device is patent pending, and the next step is to test the system in swine models.

Beyond early detection of peptic ulcers, gastritis, and gastric cancers, the device could also be used to monitor brain health. How? Through the brain-gut axis. Neurotransmitters are also found in the gut, and “how they’re upregulated and downregulated have a correlation to neurodegenerative diseases,” says Khan.

The brain is the ultimate focus of Khan’s research. He is interested in developing non-invasive ways to detect neurotransmitters related to Parkinson’s and Alzheimer’s diseases.

More information:

Angsagan Abdigazy et al, 3D gas mapping in the gut with AI-enabled ingestible and wearable electronics, Cell Reports Physical Science (2024). DOI: 10.1016/j.xcrp.2024.101990

Citation:

From wearables to swallowables: Engineers create GPS-like smart pills with AI (2024, June 14) retrieved 24 June 2024 from https://techxplore.com/news/2024-06-wearables-swallowables-gps-smart-pills.html
