American Express will acquire the dining reservation and event management platform Tock from Squarespace for $400 million in cash.

AmEx began making acquisitions in the dining and event space with its purchase of Resy five years ago, giving cardmembers access to hard-to-get restaurants and locations. Other credit card issuers have done the same. JPMorgan acquired The Infatuation as a lifestyle brand in 2021.

Tock, which launched in Chicago in 2014 and has been owned by Squarespace since 2021, provides reservation and table management services to roughly 7,000 restaurants and other venues. Restaurants signed up with Tock include Aquavit, the high-end Nordic restaurant in New York, as well as the buzzy new restaurant Chez Noir in California.

Squarespace and Tock confirmed the deal Friday.

AmEx’s purchase of Resy five years ago raised a lot of eyebrows in both the credit card and dining industries, but it’s become a key part of how the company locks in high-end businesses to be either AmEx-exclusive merchants or ones that give preferential treatment to AmEx cardmembers. The number of restaurants on the Resy platform has grown fivefold since AmEx purchased the company.

AmEx also announced Friday it would buy Rooam, a contactless payment platform that is used heavily in stadiums and other entertainment venues. AmEx did not disclose how much it was paying for Rooam.

© 2024 The Associated Press. All rights reserved. This material may not be published, broadcast, rewritten or redistributed without permission.

Citation: AmEx buys dining reservation company Tock from Squarespace for $400M (2024, June 21), retrieved 24 June 2024 from https://techxplore.com/news/2024-06-amex-buys-dining-reservation-company.html


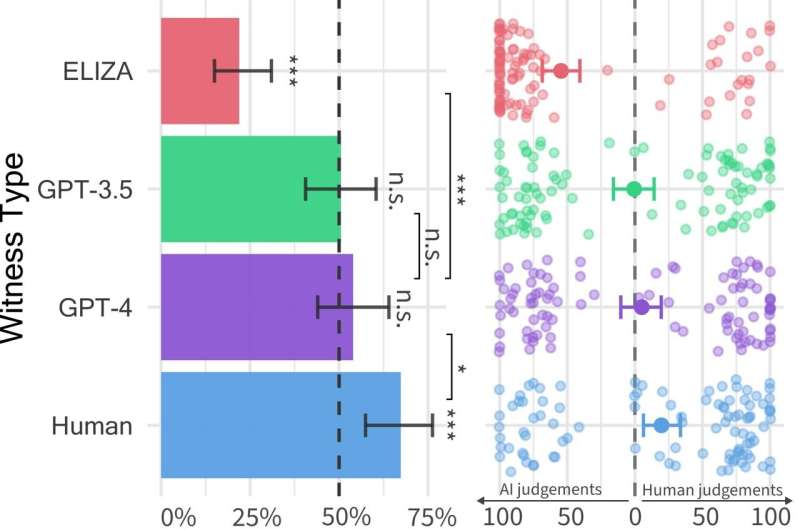

![A selection of conversations between human interrogators (green) and witnesses (grey). One of these four conversations is with a human witness, the rest are with AI. Interrogator verdicts and ground truth identities are below (to allow readers to indirectly participate). [A) Verdict: Human (100% confidence) Took a while to Google Addis Ababa. Ground Truth: GPT-4; B) Verdict: AI (100% confidence) Long time for responses, wouldn't tell me a specific place they grew up. Ground Truth: Human; C) Verdict: Human (100% confidence) He seems very down to earth and speaks naturally. Ground Truth: GPT-3.5; D) Verdict: AI (67% confidence), Did not put forth any effort to convince me they were human and the responses were odd, Ground Truth: ELIZA.] Credit: Jones and Bergen. People struggle to tell humans apart from ChatGPT in five-minute chat conversations](https://scx1.b-cdn.net/csz/news/800a/2024/people-struggle-to-tel.jpg)

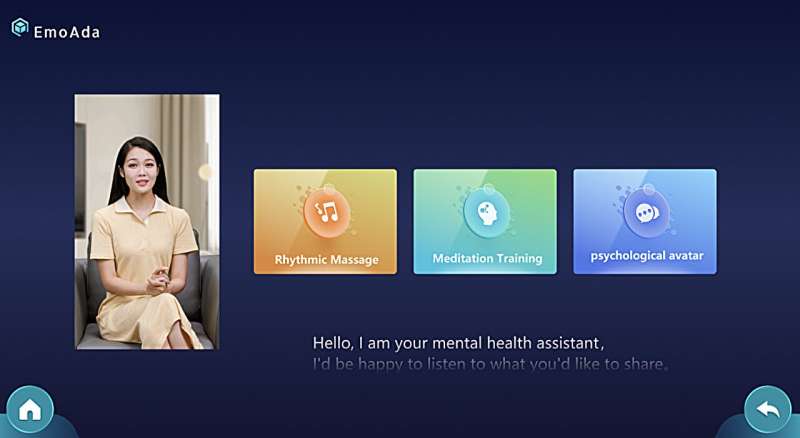

![The architecture of the Multimodal Emotion Interaction Large Language Model. The researchers used the open-source model baichuan13B-chat as the foundation, integrating deep feature vectors extracted from visual, text, and audio models through an MLP layer into baichuan13B-chat. They employed a Mixture-of-Modality Adaptation technique to achieve multimodal semantic alignment, enabling the LLM model to process multimodal information. The team also constructed a multimodal emotion fine-tuning dataset, including open-source PsyQA dataset and a team-collected dataset of psychological interview videos. Using HuggingGPT[6], they developed a multimodal fine-tuning instruction set to enhance the model's multimodal interaction capabilities. The researchers are creating a psychological knowledge graph to improve the model's accuracy in responding to psychological knowledge and reduce model hallucinations. By combining these various techniques, MEILLM can perform psychological assessments, conduct psychological interviews using psychological scales, and generate psychological assessment reports for users. MEILLM can also create comprehensive psychological profiles for users, including emotions, moods, and personality, and provides personalized psychological guidance based on the user's psychological profile. MEILLM offers users a more natural and humanized emotional support dialogue and a personalized psychological adaptation report. Credit: Dong et al An AI system that offers emotional support via chat](https://scx1.b-cdn.net/csz/news/800a/2024/an-ai-system-that-offe-1.jpg)