The space environment is harsh and full of extreme radiation. Scientists designing spacecraft and satellites need materials that can withstand these conditions.

In a paper published in January 2024 in Nature Communications, my team of materials researchers demonstrated that a next-generation semiconductor material called metal-halide perovskite can recover from radiation damage by healing itself.

Metal-halide perovskites belong to the perovskites, a class of materials first discovered in 1839 and found abundantly in Earth’s crust. They absorb sunlight and efficiently convert it into electricity, making them a potentially good fit for space-based solar panels that can power satellites or future space habitats.

Researchers make perovskites in the form of inks, then coat the inks onto glass plates or plastic, creating thin, filmlike devices that are lightweight and flexible.

Surprisingly, these thin-film solar cells perform as well as conventional silicon solar cells in laboratory demonstrations, even though they are almost 100 times thinner.

But these films can degrade if they’re exposed to moisture or oxygen. Researchers and industry are working to address these stability concerns for terrestrial deployment.

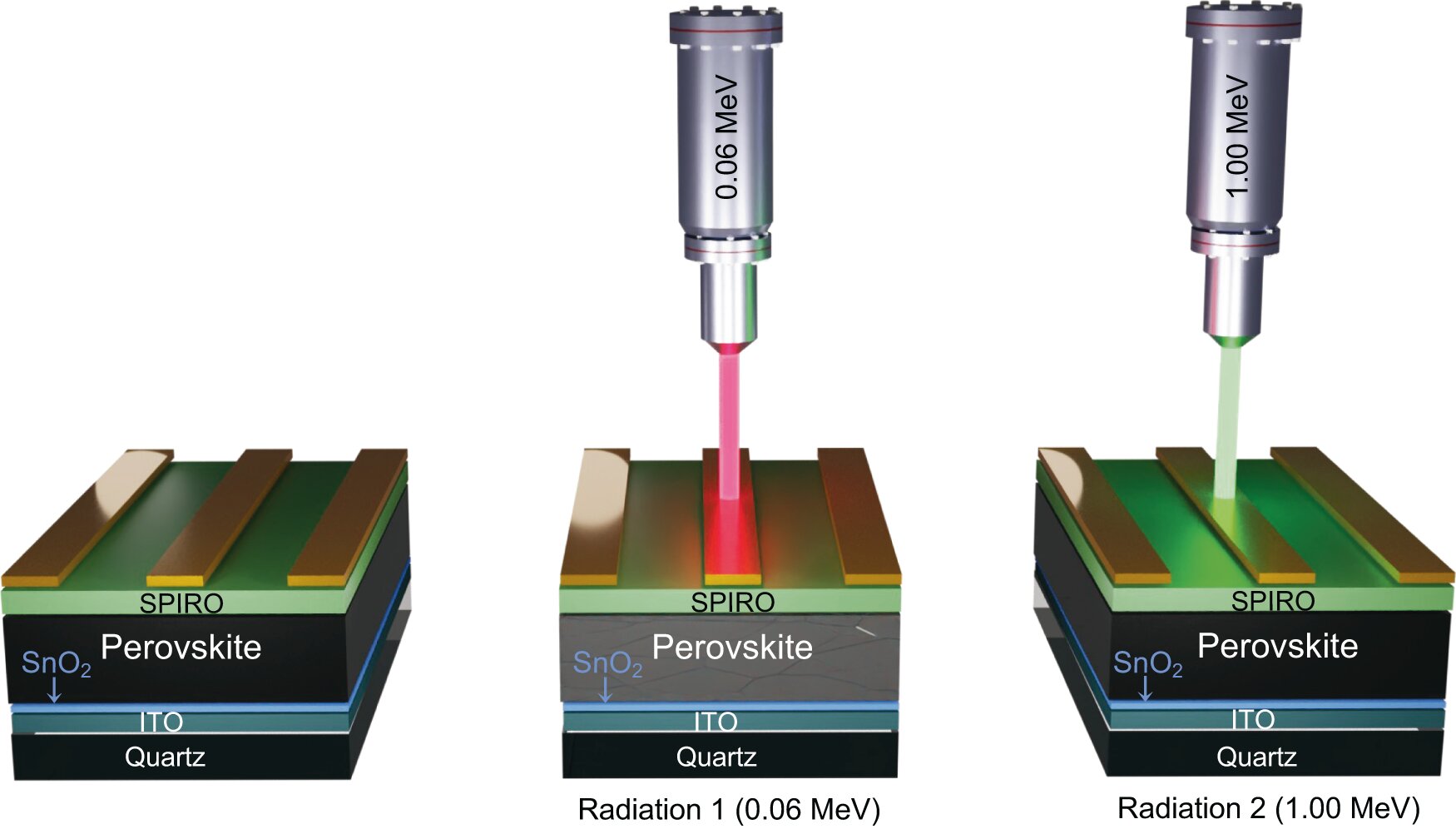

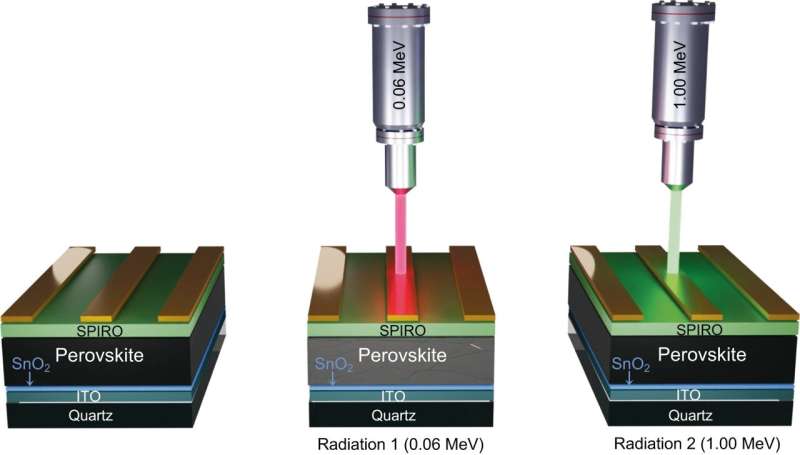

To test how perovskite solar cells might hold up in space, my team developed a radiation experiment. We exposed the cells to protons at both low and high energies and found a property that had not been seen before.

The high-energy protons healed the damage caused by the low-energy protons, allowing the device to recover and continue doing its job. The conventional semiconductors used for space electronics do not show this healing.

My team was surprised by this finding. How can a material that degrades when exposed to oxygen and moisture not only resist the harsh radiation of space but also self-heal in an environment that destroys conventional silicon semiconductors?

In our paper, we started to unravel this mystery.

Why it matters

Scientists predict that in the next 10 years, satellite launches into near-Earth orbit will increase exponentially, and space agencies such as NASA aim to establish bases on the moon.

Materials that can tolerate extreme radiation and self-heal would change the game.

Researchers estimate that deploying just a few pounds of perovskite materials into space could generate up to 10,000,000 watts of power. It currently costs about US$4,000 per kilogram (roughly $1,800 per pound) to launch materials into space, so lightweight, efficient materials are important.
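The per-pound launch price follows from the per-kilogram price by a simple unit conversion, and it comes out a little over $1,800. The short Python sketch below shows that arithmetic. The US$4,000 per kilogram figure is the one quoted above; the function names and the 2-kilogram example payload are hypothetical, chosen only for illustration.

```python
# Illustrative sketch only. The US$4,000/kg launch price is the figure quoted
# in the article; the 2 kg payload below is a hypothetical example value.

KG_PER_LB = 0.45359237  # exact definition of the international pound


def cost_per_pound(cost_per_kg: float) -> float:
    """Convert a launch price from dollars per kilogram to dollars per pound."""
    return cost_per_kg * KG_PER_LB


def launch_cost(mass_kg: float, cost_per_kg: float = 4000.0) -> float:
    """Total launch cost in dollars for a payload of the given mass."""
    return mass_kg * cost_per_kg


if __name__ == "__main__":
    print(f"Per pound: ${cost_per_pound(4000.0):,.0f}")
    print(f"Hypothetical 2 kg payload: ${launch_cost(2.0):,.0f}")
```

Because the cost scales directly with mass, every kilogram a solar panel sheds saves about $4,000 at this launch price.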

What still isn’t known

Our findings shed light on a remarkable aspect of perovskites: their tolerance to damage and defects. Perovskite crystals are a type of soft material, which means that their atoms can move into different states that scientists call vibrational modes.

Atoms in perovskites are normally arranged in a lattice formation. But radiation can knock the atoms out of position, damaging the material. The vibrations might help reposition the atoms back into place, but we’re still not sure exactly how this process works.

What’s next?

Our findings suggest that soft materials might be uniquely helpful in extreme environments, including space.

But radiation isn’t the only stress that materials have to weather in space. Scientists don’t yet know how perovskites will fare when exposed to vacuum conditions and extreme temperature variations, along with radiation, all at once. Temperature could play a role in the healing behavior my team saw, but we’ll need to conduct more research to determine how.

Future research could dive deeper into how the vibrations in these materials relate to their self-healing properties.

More information:

Ahmad R. Kirmani et al, Unraveling radiation damage and healing mechanisms in halide perovskites using energy-tuned dual irradiation dosing, Nature Communications (2024). DOI: 10.1038/s41467-024-44876-1

Provided by

The Conversation

This article is republished from The Conversation under a Creative Commons license.

Citation:

Space radiation can damage satellites—next-generation material could self-heal when exposed to cosmic rays (2024, June 24)

retrieved 25 June 2024 from https://phys.org/news/2024-06-space-satellites-generation-material-exposed.html
