Worldwide, humans are living longer than ever before. According to data from the United Nations, approximately 13.5% of the world’s people were at least 60 years old in 2020, and by some estimates, that figure could increase to nearly 22% by 2050.

Advanced age can bring cognitive and physical difficulties, and as more elderly people may need help managing these challenges, advances in technology could help meet that need.

One of the newest innovations comes from a collaboration between researchers at Spain’s Universidad Carlos III and the manufacturer Robotnik. The team has developed the Autonomous Domestic Ambidextrous Manipulator (ADAM), an elderly care robot that can assist people with basic daily functions. The team reports on its work in Frontiers in Neurorobotics.

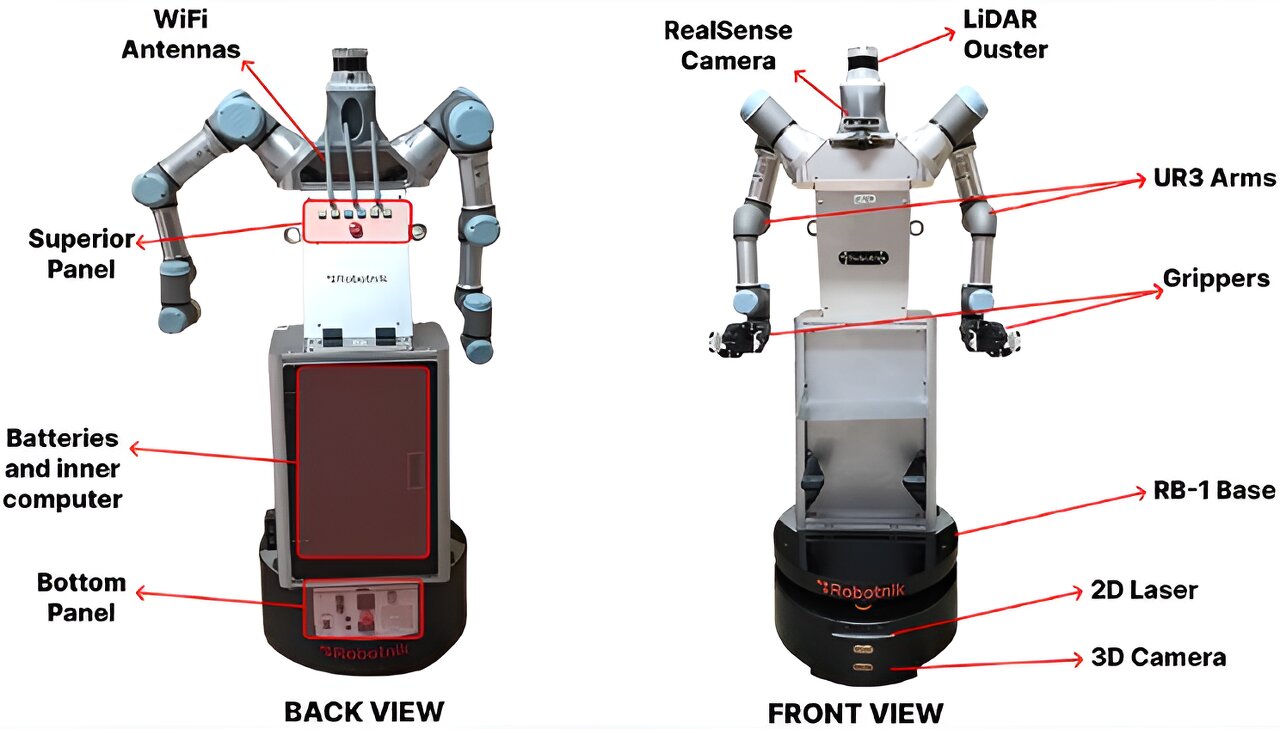

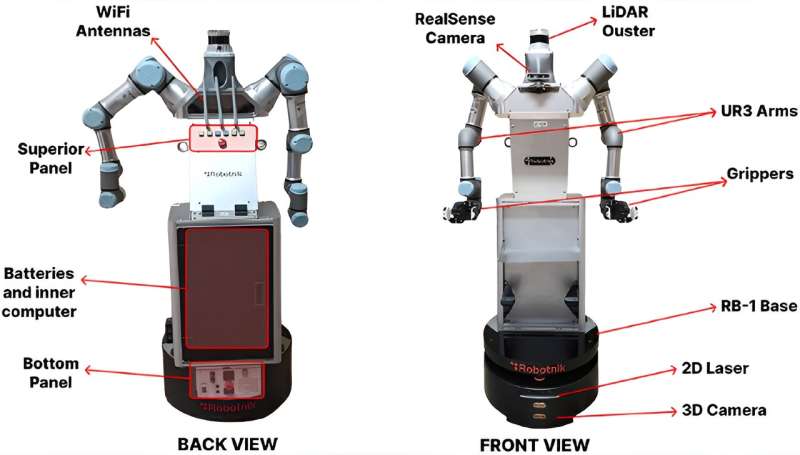

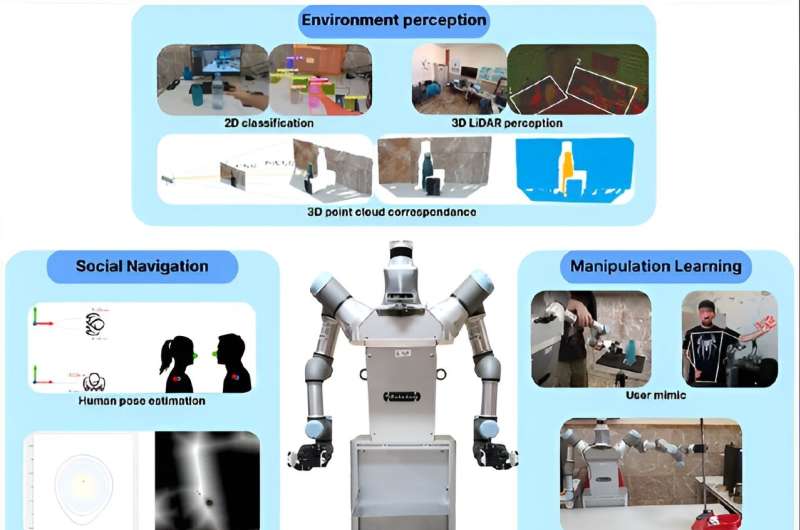

ADAM, an upright indoor mobile robot, features a vision system and two arms with grippers. It can adapt to homes of different sizes to operate safely and effectively. It respects users’ personal space while helping with domestic tasks, and it learns from experience through imitation learning.

On a practical level, ADAM can pass through doors and perform everyday tasks such as sweeping a floor, moving objects and furniture as needed, setting a table, pouring water, preparing a simple meal, and bringing items to a user upon request.

In their review of existing work in this field, the researchers describe several recently developed robots adapted to help elderly people with two kinds of tasks. Cognitive tasks include memory training and games intended to alleviate dementia symptoms. Physical tasks include detecting falls and sending notifications, monitoring and assisting with the use of home automation systems, and retrieving items from the floor or storing items in areas the user cannot reach.

Against this backdrop, the team behind this new work aimed to design a robot with unique features to assist users with physical tasks in their own homes.

Next-level personal care through modular design and a learning platform

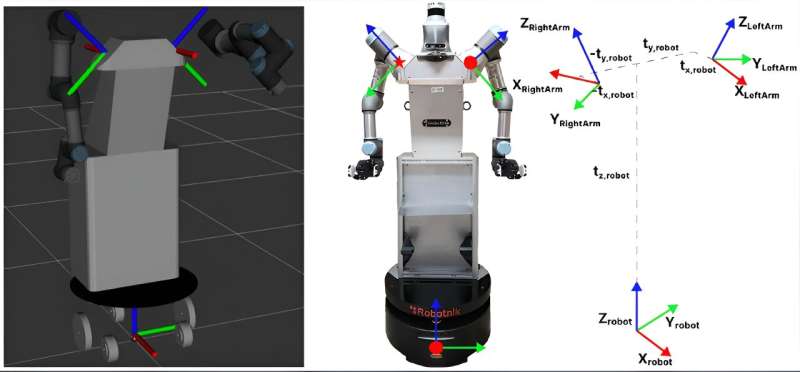

Several features set ADAM apart from existing personal care robots. The first is its modular design, which includes a base, cameras, arms and hands providing multiple sensory inputs. Each of these units can work independently or cooperatively at a high or low level. Importantly, this means that the robot can support research while meeting users’ personal care needs.

In addition, ADAM’s arms themselves are collaborative, allowing for user operation, and can move according to the parameters of the immediate environment. Moreover, as a basic safety feature of the robot’s design, it continuously considers the people present in the environment in order to avoid collisions while providing personal care.
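The article does not describe how ADAM’s people-aware safety behavior is implemented. As a rough illustration only, one common approach in collaborative robotics is to scale the commanded speed by the distance to the nearest detected person. The sketch below assumes people positions are already provided by perception; the thresholds `STOP_DISTANCE` and `SLOW_DISTANCE` and all function names are hypothetical, not values or APIs from the ADAM paper.

```python
import math
from dataclasses import dataclass


@dataclass
class Point:
    x: float  # metres, robot/world frame
    y: float


# Hypothetical thresholds in metres; NOT taken from the ADAM paper.
STOP_DISTANCE = 0.5
SLOW_DISTANCE = 1.5


def nearest_person_distance(robot: Point, people: list[Point]) -> float:
    """Euclidean distance from the robot to the closest detected person."""
    if not people:
        return math.inf
    return min(math.hypot(p.x - robot.x, p.y - robot.y) for p in people)


def speed_scale(robot: Point, people: list[Point]) -> float:
    """Return a factor in [0, 1] to multiply the commanded speed by."""
    d = nearest_person_distance(robot, people)
    if d <= STOP_DISTANCE:
        return 0.0  # someone is too close: stop
    if d >= SLOW_DISTANCE:
        return 1.0  # nobody nearby: full speed
    # Linear ramp between the stop and slow-down distances.
    return (d - STOP_DISTANCE) / (SLOW_DISTANCE - STOP_DISTANCE)
```

A linear ramp is only one choice; a real controller would also account for the robot’s braking distance and the people’s own motion.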

Technical aspects

ADAM stands 160 cm tall—about the height of a petite human adult. Its arms, whose maximum load capacity is 3 kg, extend to a width of 50 cm. The researchers point out that they designed the robot to “simulate the structure of a human torso and arms. This is because a human-like structure allows it to work more comfortably in domestic environments because the rooms, doors, and furniture are adapted to humans.”

Batteries in ADAM’s base power its movements, cameras, and 3D LiDAR sensors. With all systems running, the robot’s minimum battery life is just under four hours, and battery charging takes a little over two hours. It can rotate in place and move forward and backward, but not laterally.
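A base that can rotate in place and drive forward and backward, but not sideways, behaves like a differential-drive (unicycle) platform. The article does not name ADAM’s drive type, so treat this as an assumption: the sketch below shows standard unicycle kinematics, in which the pose update has no lateral velocity term, plus the conversion to left and right wheel speeds for a hypothetical `track_width`.

```python
import math


def step(x: float, y: float, theta: float,
         v: float, omega: float, dt: float) -> tuple[float, float, float]:
    """Advance a differential-drive (unicycle) pose by one time step.

    v: forward speed (m/s); omega: yaw rate (rad/s); dt: time step (s).
    There is no lateral velocity input, so the base cannot move sideways.
    """
    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    theta += omega * dt
    return x, y, theta


def wheel_speeds(v: float, omega: float, track_width: float) -> tuple[float, float]:
    """Convert body velocities to left/right wheel linear speeds (m/s).

    track_width is the (hypothetical) distance between the two drive wheels.
    """
    left = v - omega * track_width / 2
    right = v + omega * track_width / 2
    return left, right
```

With `v = 0` and a nonzero `omega`, the position stays fixed while the heading changes, which is the rotate-in-place case; the wheels then turn at equal and opposite speeds.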

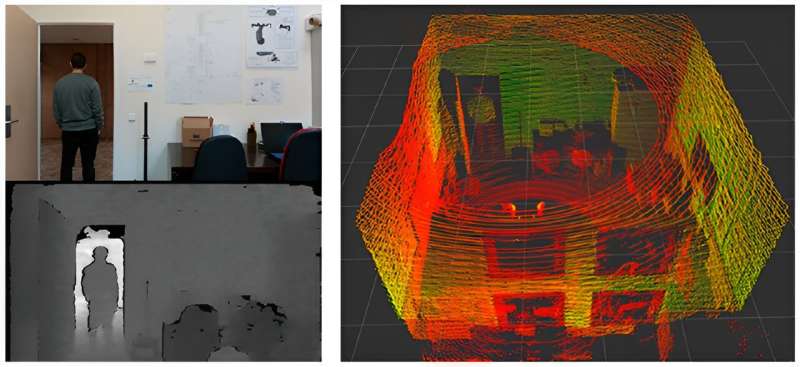

ADAM includes two internally connected computers—one for the base and the other for the arms—and a WiFi module for external communication. An RGBD camera and 2D LiDAR help to control basic forward movement, complemented by additional RGBD and LiDAR sensors positioned higher in the unit that expand its perception angle and range.

The additional RGBD sensor is a RealSense D435 depth camera that includes an RGB module and infrared stereo vision, while the additional LiDAR sensor provides 3D spatial data that a geometric mapping algorithm uses to map objects throughout the environment.
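The article does not specify ADAM’s geometric mapping algorithm. As a simplified illustration of how 3D LiDAR points can feed a map, the sketch below projects points in the robot frame onto a 2D occupancy grid and discards returns outside a height band (for example, the floor). The `resolution`, `size`, and height limits are hypothetical parameters; a real system would typically also handle sensor pose, free-space ray tracing, and probabilistic updates.

```python
import math


def occupancy_grid(points, resolution, size, z_min=0.05, z_max=1.6):
    """Project 3D points (x, y, z) in metres onto a size x size boolean grid.

    The grid is centred on the robot. Points outside the height band
    [z_min, z_max] (e.g. floor returns) are ignored. The default band is a
    hypothetical choice, not a value from the ADAM paper.
    """
    grid = [[False] * size for _ in range(size)]
    half = size // 2
    for x, y, z in points:
        if not (z_min <= z <= z_max):
            continue  # skip floor/ceiling returns
        col = math.floor(x / resolution) + half
        row = math.floor(y / resolution) + half
        if 0 <= row < size and 0 <= col < size:
            grid[row][col] = True  # mark the cell as occupied
    return grid
```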

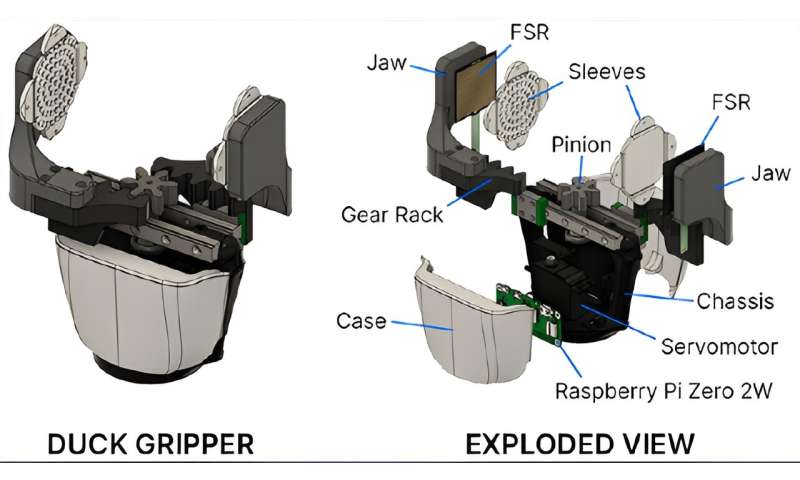

The approximate range of motion of ADAM’s arms is 360°, and a parallel gripper system (the “Duck Gripper”) serves as its hands. This system has an independent power supply and a Raspberry Pi Zero 2 W board that communicates via WiFi with a corresponding Robot Operating System (ROS) node. Force-sensing resistors (FSRs) on each gripper jaw help the hands grasp and pick up objects with an appropriate amount of force.
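One simple way to use jaw-mounted force sensors is to close the gripper in small increments until the measured force reaches a target. The sketch below is hypothetical: `read_force` and `set_opening` stand in for whatever the Duck Gripper’s Raspberry Pi firmware and ROS node actually expose, and `SimGripper` is a toy model (force in newtons grows linearly once the jaws pass the object) used only to exercise the loop.

```python
from typing import Callable


def close_until_force(
    read_force: Callable[[], float],
    set_opening: Callable[[float], None],
    target_force: float,
    start_opening: float = 1.0,
    step: float = 0.02,
) -> bool:
    """Close a parallel gripper step by step until the force reaches target_force.

    Opening is normalised: 1.0 = fully open, 0.0 = fully closed.
    Returns True if the target force was reached, False if the jaws closed
    completely without reaching it (e.g. nothing was grasped).
    """
    opening = start_opening
    set_opening(opening)
    while opening > 0.0:
        if read_force() >= target_force:
            return True
        opening = max(0.0, opening - step)
        set_opening(opening)
    return read_force() >= target_force


class SimGripper:
    """Toy gripper model: force rises linearly once the jaws pass contact_at."""

    def __init__(self, contact_at: float, stiffness: float = 100.0):
        self.opening = 1.0
        self.contact_at = contact_at  # opening at which the jaws touch the object
        self.stiffness = stiffness    # newtons per unit of extra closure

    def set_opening(self, opening: float) -> None:
        self.opening = opening

    def read_force(self) -> float:
        return max(0.0, (self.contact_at - self.opening) * self.stiffness)


if __name__ == "__main__":
    gripper = SimGripper(contact_at=0.5)
    ok = close_until_force(gripper.read_force, gripper.set_opening, target_force=2.0)
    print(ok, round(gripper.opening, 2))
```

Stopping at a force threshold, rather than at a fixed jaw position, lets the same loop handle objects of different sizes without crushing them.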

Acing an early test involving collaboration

The researchers report that they have successfully tested ADAM as part of the Heterogeneous Intelligent Multi-Robot Team for Assistance of Elderly People (HIMTAE) project. Collaborating with researchers from Spain’s University of Cartagena and Sweden’s University of Örebro, they presented ADAM as an integral part of a team including multiple robots and home automation systems.

In the test, another robot (“Robwell”) had established an “empathetic relationship” with users, who wore bracelets that monitored their mental and physical states and communicated them to Robwell.

Robwell, in turn, would remind the users to drink water when needed and communicate with both the home automation system and ADAM regarding specific user needs. ADAM’s role was to perform tasks in the kitchen, preparing food or water and delivering it to Robwell, which would then provide it to the users.

The users who participated in the test reported an average satisfaction of 93% with its outcome. The researchers note that employing two robots was effective: Robwell could monitor and engage with users while ADAM worked in the kitchen. Users were also able to enter the kitchen and interact with ADAM while it performed tasks, and ADAM could likewise interact with users while they performed tasks.


Duck Gripper final design with an exploded view of the gripper and its main components. Credit: Frontiers in Neurorobotics (2024). DOI: 10.3389/fnbot.2024.1337608


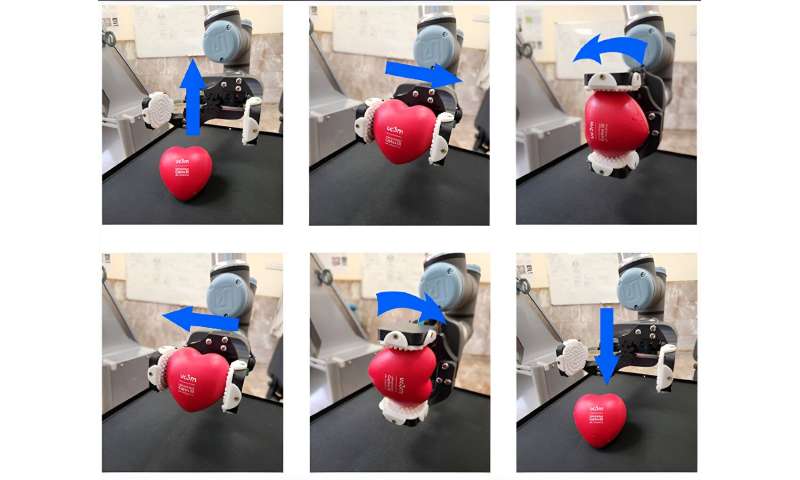

Duck Gripper performance test movements. From left to right, and from top to bottom. The gripper grabs the object on the workstation. The gripper displaces away from the robot. The end effector rotates 90° clockwise. The gripper displaces toward the robot. The end effector rotates 90° counterclockwise. The gripper opens to release the object. Credit: Frontiers in Neurorobotics (2024). DOI: 10.3389/fnbot.2024.1337608

What’s needed next?

As the HIMTAE test results were obtained within a controlled laboratory environment, the team cautions that future tests must take place in authentic domestic environments to determine user satisfaction with ADAM’s performance.

Looking ahead, the researchers observe, “The perception system is fixed, so in certain situations, ADAM will not be able to detect specific parts of the environment. The bimanipulation capabilities of ADAM are not fully developed, and the arms configuration is not optimized.” In addition to focusing on improvements in these areas, they write that “new task and motion planning strategies will be implemented to deal with more complex home tasks, which will make ADAM a much more complete robot companion for elderly care.”

More information:

Alicia Mora et al, ADAM: a robotic companion for enhanced quality of life in aging populations, Frontiers in Neurorobotics (2024). DOI: 10.3389/fnbot.2024.1337608

© 2024 Science X Network

Citation:

A novel elderly care robot could soon provide personal assistance, enhancing seniors’ quality of life (2024, February 19)

retrieved 24 June 2024

from https://techxplore.com/news/2024-02-elderly-robot-personal-seniors-quality.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.