Both living creatures and AI-driven machines need to act quickly and adaptively in response to situations. In psychology and neuroscience, behavior is commonly divided into two types: habitual (fast and simple but inflexible) and goal-directed (flexible but complex and slower).

Daniel Kahneman, who won the Nobel Prize in Economic Sciences, distinguishes between these as System 1 and System 2. However, there is ongoing debate as to whether they are independent and conflicting entities or mutually supportive components.

Scientists from the Okinawa Institute of Science and Technology (OIST) and Microsoft Research Asia in Shanghai have proposed a new AI method in which systems of habitual and goal-directed behaviors learn to help each other.

In computer simulations that mimicked the exploration of a maze, the method adapted quickly to changing environments and also reproduced the behavior of humans and animals that have long been accustomed to a particular environment.

The study, published in Nature Communications, not only paves the way for the development of systems that adapt quickly and reliably in the burgeoning field of AI, but also provides clues to how we make decisions in the fields of neuroscience and psychology.

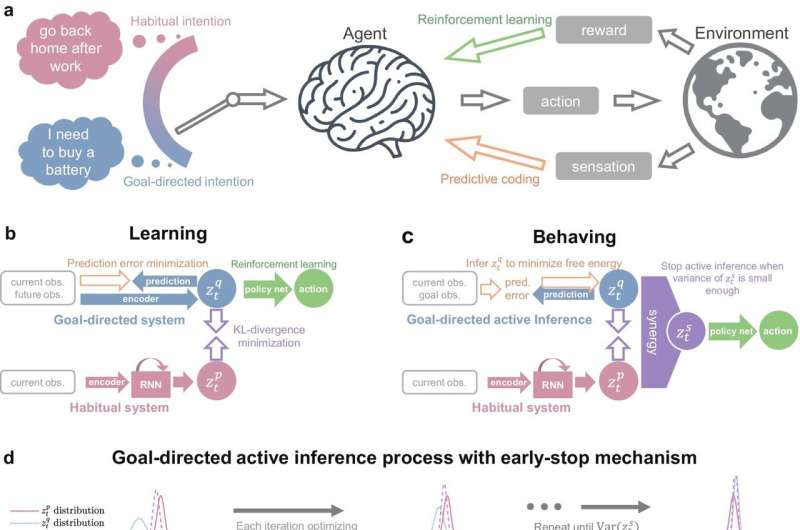

Drawing on the theory of “active inference,” which has attracted much attention recently, the scientists derived a model that integrates habitual and goal-directed systems for learning behavior in AI agents that perform reinforcement learning, a method of learning based on rewards and punishments.

In the paper, they created a computer simulation mimicking a task in which mice explore a maze based on visual cues and are rewarded with food when they reach the goal.

They examined how these two systems adapt and integrate while interacting with the environment, showing that they can achieve adaptive behavior quickly. It was observed that the AI agent collected data and improved its own behavior through reinforcement learning.

What our brains prefer

After a long day at work, you usually head home on autopilot (habitual behavior). However, if you have just moved house and are not paying attention, you might find yourself driving back to your old place out of habit.

When you catch yourself doing this, you switch gears (goal-directed behavior) and reroute to your new home. Traditionally, these two behaviors are considered to work independently, resulting in behavior being either habitual and fast but inflexible, or goal-directed and flexible but slow.

“The automatic transition from goal-directed to habitual behavior during learning is a very famous finding in psychology. Our model and simulations can explain why this happens: The brain would prefer behavior with higher certainty. As learning progresses, habitual behavior becomes less random, thereby increasing certainty. Therefore, the brain prefers to rely on habitual behavior after significant training,” Dr. Dongqi Han, a former Ph.D. student at OIST’s Cognitive Neurorobotics Research Unit and first author of the paper, explained.
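The link between training and certainty can be illustrated with a small sketch. It is not the model from the paper, which is built on variational Bayes. It only assumes that a habitual policy is a probability distribution over actions, that its randomness can be measured with Shannon entropy, and that a controller is chosen by comparing that entropy with a threshold. The function names, the preference values and the threshold of 0.5 are all hypothetical.

```python
import math

def softmax(prefs):
    """Turn action preferences into a probability distribution."""
    top = max(prefs)
    exps = [math.exp(p - top) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in nats: high means random, low means certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def pick_controller(habit_probs, threshold=0.5):
    """Hypothetical rule: rely on the habit once it is certain enough."""
    if entropy(habit_probs) < threshold:
        return "habitual"
    return "goal-directed"

# Made-up preferences over four actions, before and after long training.
early_habit = softmax([0.2, 0.0, 0.1, 0.0])  # nearly uniform
late_habit = softmax([6.0, 0.0, 0.1, 0.0])   # one action dominates

print(pick_controller(early_habit))
print(pick_controller(late_habit))
```

In this toy setting the nearly uniform early habit is too uncertain to trust, so the goal-directed controller is selected. After training sharpens the distribution, the same rule hands control to the habit.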

When given a new goal it has not been trained for, the AI uses an internal model of the environment to plan its actions. It does not need to consider all possible actions; instead, it draws on a combination of its habitual behaviors, which makes planning more efficient.

This challenges traditional AI approaches, which require every possible goal to be explicitly included in training before it can be achieved. In this model, a desired goal can be achieved without explicit training, by flexibly combining learned knowledge.

“It’s important to achieve a kind of balance or trade-off between flexible and habitual behavior,” Prof. Jun Tani, head of the Cognitive Neurorobotics Research Unit, stated. “There could be many possible ways to achieve a goal, but to consider all possible actions is very costly, therefore goal-directed behavior is limited by habitual behavior to narrow down options.”
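The idea of habits narrowing down a planner's options can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' algorithm: the maze is a hypothetical open 4x4 grid, the internal model is a simple function that predicts the next cell, the habit is a fixed made-up preference for moving right or down, and planning is a breadth-first search that expands only the `top_k` most habitual actions in each cell.

```python
from collections import deque

ACTIONS = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}
SIZE = 4  # hypothetical 4x4 open grid standing in for the maze

def model_step(state, action):
    """Internal model: predict the next cell, staying put at the walls."""
    dx, dy = ACTIONS[action]
    x, y = state[0] + dx, state[1] + dy
    return (x, y) if 0 <= x < SIZE and 0 <= y < SIZE else state

def habit_prior(state):
    """Made-up learned habit: mostly move right or down in every cell."""
    return {"right": 0.45, "down": 0.45, "up": 0.05, "left": 0.05}

def plan(start, goal, top_k):
    """Breadth-first search over the top_k most habitual actions only.

    Returns the action sequence (or None) and the number of cells expanded.
    """
    frontier = deque([(start, [])])
    seen = {start}
    expanded = 0
    while frontier:
        state, path = frontier.popleft()
        if state == goal:
            return path, expanded
        expanded += 1
        prior = habit_prior(state)
        candidates = sorted(prior, key=prior.get, reverse=True)[:top_k]
        for action in candidates:
            nxt = model_step(state, action)
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, path + [action]))
    return None, expanded

# Same new goal, planned with and without the habit narrowing the options.
narrow_path, narrow_cost = plan((1, 1), (3, 3), top_k=2)
full_path, full_cost = plan((1, 1), (3, 3), top_k=4)
print(narrow_path, narrow_cost, full_cost)
```

Both searches find a route of the same length here, but the habit-limited one expands fewer cells. The trade-off Tani describes also shows up: a goal that lies against the habit, such as cell (0, 0) from this start, is not found with `top_k=2`, so a practical agent would need some way to widen the search when the habit fails.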

Building better AI

Dr. Han became interested in neuroscience and the gap between artificial and human intelligence when he started working on AI algorithms. “I started thinking about how AI can behave more efficiently and adaptably, like humans. I wanted to understand the underlying mathematical principles and how we can use them to improve AI. That was the motivation for my Ph.D. research.”

Understanding the difference between habitual and goal-directed behaviors has important implications, especially in the field of neuroscience, because it can shed light on neurological disorders such as ADHD, OCD, and Parkinson’s disease.

“We are exploring the computational principles by which multiple systems in the brain work together. We have also seen that neuromodulators such as dopamine and serotonin play a crucial role in this process,” Prof. Kenji Doya, head of the Neural Computation Unit, explained.

“AI systems developed with inspiration from the brain and proven capable of solving practical problems can serve as valuable tools in understanding what is happening in the brains of humans and animals.”

Dr. Han would like to help build better AI that can adapt its behavior to achieve complex goals.

“We are very interested in developing AI that have near human abilities when performing everyday tasks, so we want to address this human-AI gap. Our brains have two learning mechanisms, and we need to better understand how they work together to achieve our goal.”

More information:

Dongqi Han et al, Synergizing habits and goals with variational Bayes, Nature Communications (2024). DOI: 10.1038/s41467-024-48577-7

Citation: Simplicity versus adaptability: Scientists propose AI method that integrates habitual and goal-directed behaviors (2024, June 14), retrieved 24 June 2024 from https://techxplore.com/news/2024-06-simplicity-scientists-ai-method-habitual.html

